Chapter 5. Autonomous Agents

Life is a journey, not a destination.

—Ralph Waldo Emerson

So far, I’ve been demonstrating inanimate objects, lifeless shapes sitting on the canvas that flop around when affected by forces in their environment. But this is The Nature of Code. What if I could breathe life into those shapes? What if those shapes could live by their own rules? Can shapes have hopes and dreams and fears? These sorts of questions are the domain of this chapter. They’re what separate unthinking objects from something much more interesting: autonomous agents.

Forces from Within

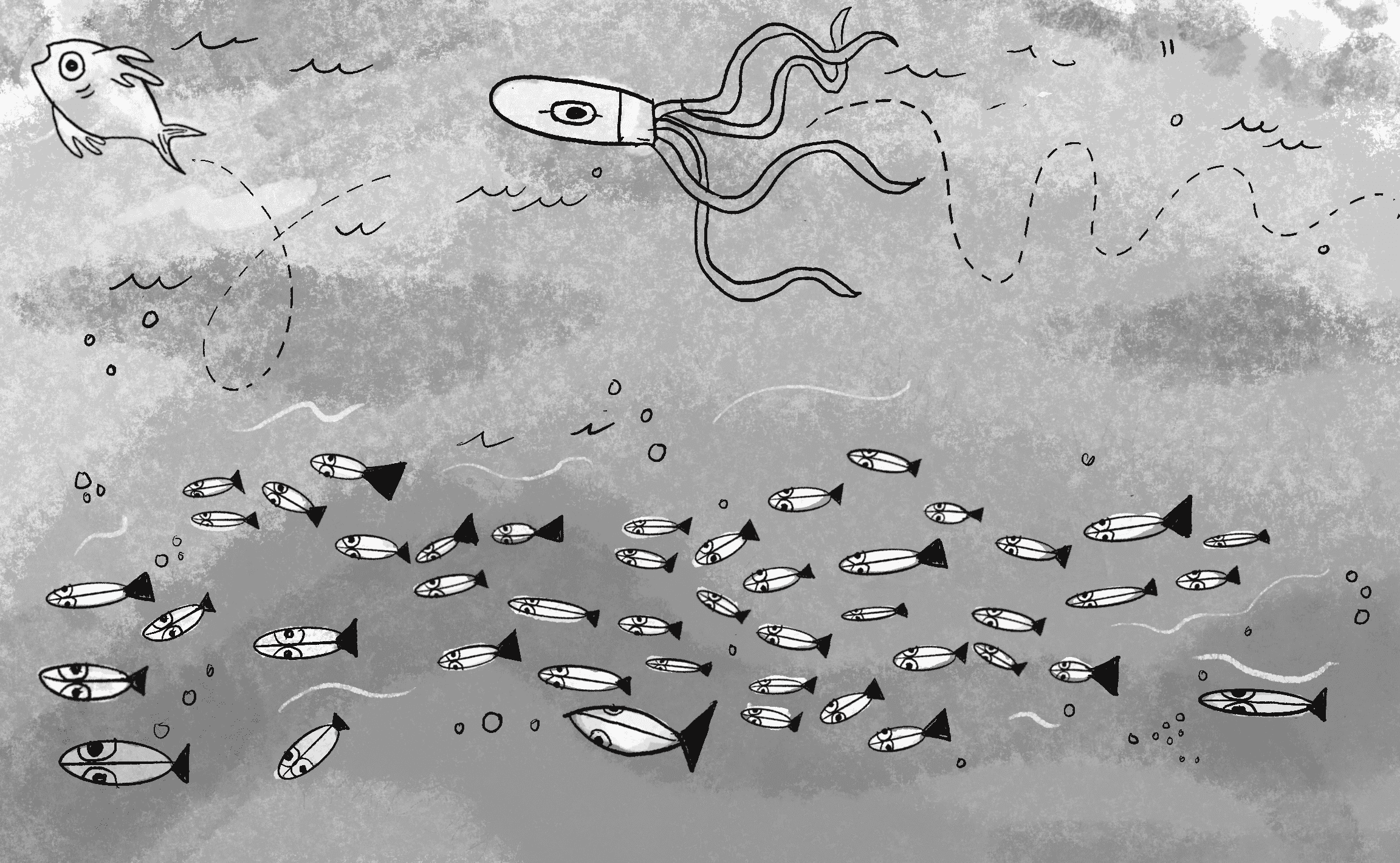

An autonomous agent is an entity that makes its own choices about how to act in its environment, without any influence from a leader or global plan. In this book, acting typically means moving. For example, instead of simulating a particle that’s passively drawn toward or repelled by another shape because of a force like gravity, I’d now like to design an entity that has the ability—or even the “desire”—to make decisions about its movements. It could decide to move toward or away from a target, like a moth drawn to a flame or a small fish evading a predator.

The switch from inanimate objects to autonomous agents is a significant conceptual leap, but the codebase will barely change. The desire for an autonomous agent to move is just another force, like the force of gravity or the force of the wind. It’s just that now the force is coming from within.

Here are three key components of autonomous agents to keep in mind as I build this chapter’s examples:

- An autonomous agent has a limited ability to perceive its environment. It makes sense that a living, breathing being should be aware of its environment. However, this awareness doesn’t refer to just the external environment but also to the agent’s internal state—its position, velocity, and potentially other properties or even simulated emotions. Throughout the chapter, I’ll explore ways agents can take their own state into account when making decisions. I’ll also cover programming techniques for objects to store references to other objects and therefore “perceive” their surroundings. It’s important to consider the word limited here. Are you designing an all-knowing circle that flies around a canvas, aware of everything else in that canvas? Or are you creating a shape that can examine other shapes only within 15 pixels of itself? Of course, there’s no right answer to this question; it all depends on what you want. I’ll explore several possibilities throughout this chapter, but in general, limitations are good for creating a simulation that feels more “natural.” An insect, for example, may be aware of only the sights and smells that immediately surround it. To model a real-world creature, you could study the exact science of these limitations. Luckily, I can just make stuff up and try it out.

- An autonomous agent processes the information from its environment and calculates an action. This will be the easy part, as the action is a force. The environment might tell the agent that there’s a big, scary-looking shark swimming right at it, and the action will be a powerful force in the opposite direction.

- An autonomous agent has no leader. This third principle is something I care a little less about, depending on the context. For example, if you’re designing a system for which it makes sense to have a leader barking commands at various entities, then that’s what you’ll want to implement. Nevertheless, many of the chapter’s examples will have no leader for an important reason: toward the end of this chapter, I’ll examine group behaviors and look at designing collections of autonomous agents that exhibit the properties of complex systems. These are intelligent and structured group dynamics that emerge not from a leader, but from the local interactions of the elements themselves.

I could start my exploration of autonomous agents in many places. Artificial simulations of ant and termite colonies are fantastic demonstrations of systems of agents, for example. For more on this topic, I encourage you to read Turtles, Termites, and Traffic Jams by Mitchel Resnick (Bradford Books, 1997). However, I want to begin by examining agent behaviors that build on the work in the first four chapters of this book: modeling motion with vectors and forces. And so I’ll return to the book’s ever-changing hero class—once Walker, then Mover, then Particle—and give it yet another incarnation.

Vehicles and Steering

In the late 1980s, computer scientist Craig Reynolds developed algorithmic steering behaviors for animated characters. These behaviors allowed individual elements to navigate their digital environments in a lifelike manner, with strategies for fleeing, wandering, arriving, pursuing, evading, and more. Later, in his 1999 paper “Steering Behaviors for Autonomous Characters,” Reynolds uses the word vehicle to describe his autonomous agents. I’ll follow suit, calling my autonomous agent class Vehicle:

class Vehicle {

constructor() {

this.position = createVector();

this.velocity = createVector();

this.acceleration = createVector();

}

/* What else do I need to add? */

Like the Mover and Particle classes before it, the Vehicle class’s motion is controlled through its position, velocity, and acceleration vectors. This will make the steering behaviors of a single autonomous agent straightforward to implement. Yet by building a system of multiple vehicles that steer themselves according to simple, locally based rules, surprising levels of complexity emerge. The most famous example is Reynolds’s boids model for flocking or swarming behavior, which I’ll demonstrate in Example 5.11.

Why Vehicles?

In his book Vehicles: Experiments in Synthetic Psychology (Bradford Books, 1986), Italian neuroscientist and cyberneticist Valentino Braitenberg describes a series of hypothetical vehicles with simple internal structures, writing, “This is an exercise in fictional science, or science fiction, if you like that better.” Braitenberg argues that his extraordinarily simple mechanical vehicles manifest behaviors such as fear, aggression, love, foresight, and optimism. Reynolds took his inspiration from Braitenberg, and I’ll take mine from Reynolds.

Reynolds describes the motion of idealized vehicles—idealized because he wasn’t concerned with their actual engineering, but rather started with the assumption that they work and respond to the rules defined. These vehicles have three layers:

- Action selection: A vehicle has a goal (or goals) and can choose an action (or a combination of actions) based on that goal. This is essentially where I left off in the discussion of autonomous agents. The vehicle takes a look at its environment and selects an action based on a desire: “I see a zombie marching toward me. Since I don’t want my brains to be eaten, I’m going to flee from the zombie.” The goal is to keep one’s brains, and the action is to flee. Reynolds’s paper describes many goals and associated actions, such as seeking a target, avoiding an obstacle, and following a path. In a moment, I’ll start building out these examples with p5.js code.

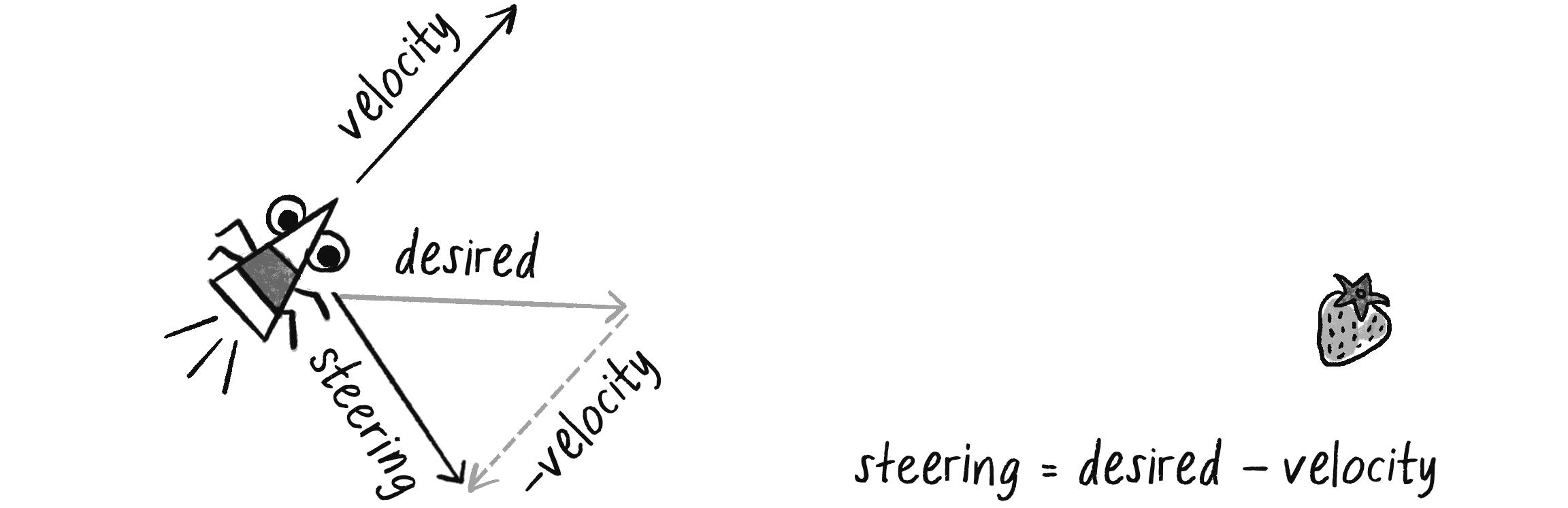

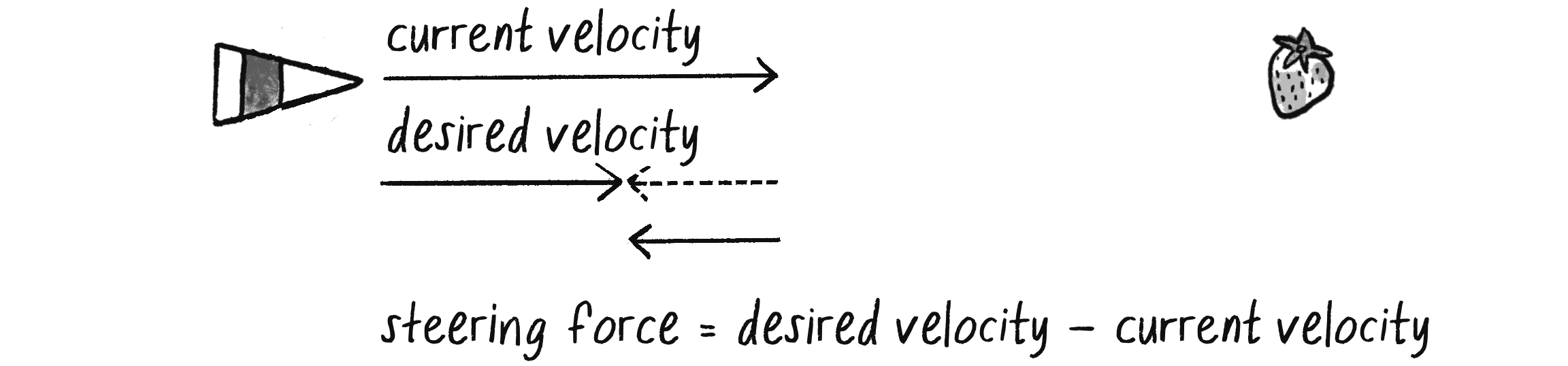

- Steering: Once an action has been selected, the vehicle has to calculate its next move. That next move will be a force—more specifically, a steering force. Luckily, Reynolds has developed a simple steering force formula that I’ll use throughout the examples in this chapter: steering force = desired velocity – current velocity. I’ll get into the details of this formula and why it works so effectively in the next section.

- Locomotion: For the most part, I’m going to ignore this third layer. In the case of fleeing from zombies, the locomotion could be described as “left foot, right foot, left foot, right foot, as fast as you can.” In a canvas, however, a rectangle, circle, or triangle’s actual movement across a window is irrelevant, given that the motion is all an illusion in the first place. This isn’t to say that you should ignore locomotion entirely, however. You’ll find great value in thinking about the locomotive design of your vehicle and how you choose to animate it. The examples in this chapter will remain visually bare; a good exercise would be to elaborate on the animation style. For example, could you add spinning wheels, oscillating paddles, or shuffling legs?

Ultimately, the most important layer for you to consider is the first one, action selection. What are the elements of your system, and what are their goals? In this chapter, I’m going to cover a series of steering behaviors (that is, actions): seeking, fleeing, following a path, following a flow field, flocking with your neighbors, and so on. As I’ve said in other chapters, however, the point isn’t that you should use these exact behaviors in all your projects. Rather, the point is to show you how to model a steering behavior—any steering behavior—in code, and to provide a foundation for designing and developing your own vehicles with new and exciting goals and behaviors.

What’s more, even though the examples in this chapter are highly literal (follow that pixel!), you should allow yourself to think more abstractly (like Braitenberg). What would it mean for your vehicle to have “love” as its goal or “fear” as its driving force? Finally (and I’ll address this in “Combining Behaviors”), you won’t get very far by developing simulations with only one action. Yes, the first example’s action will be to seek a target. But by being creative—by making these steering behaviors your own—it will all come down to mixing and matching multiple actions within the same vehicle. View the coming examples not as singular behaviors to be emulated, but as pieces of a larger puzzle that you’ll eventually assemble.

The Steering Force

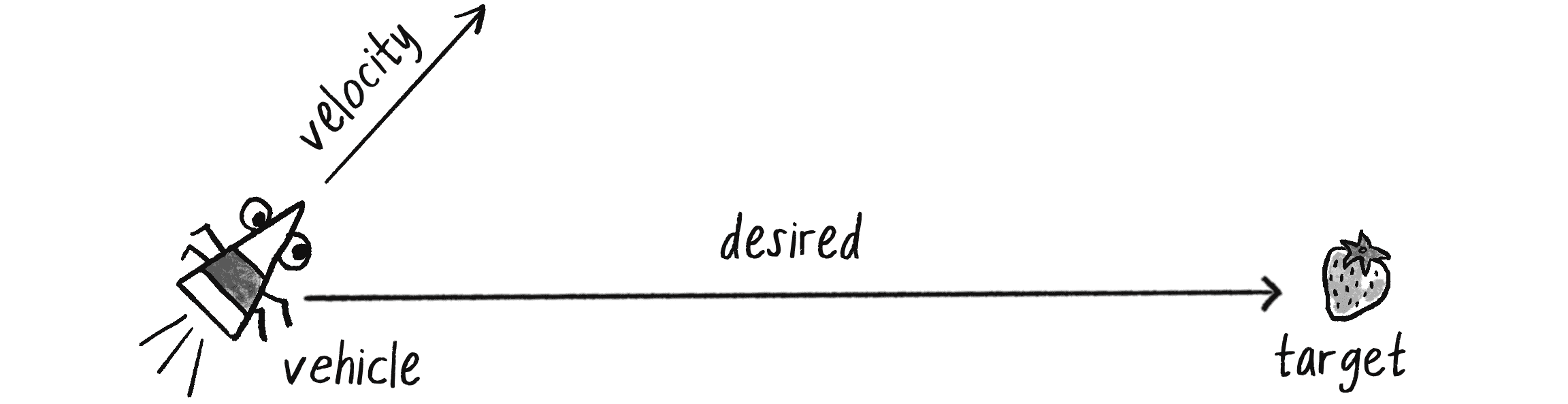

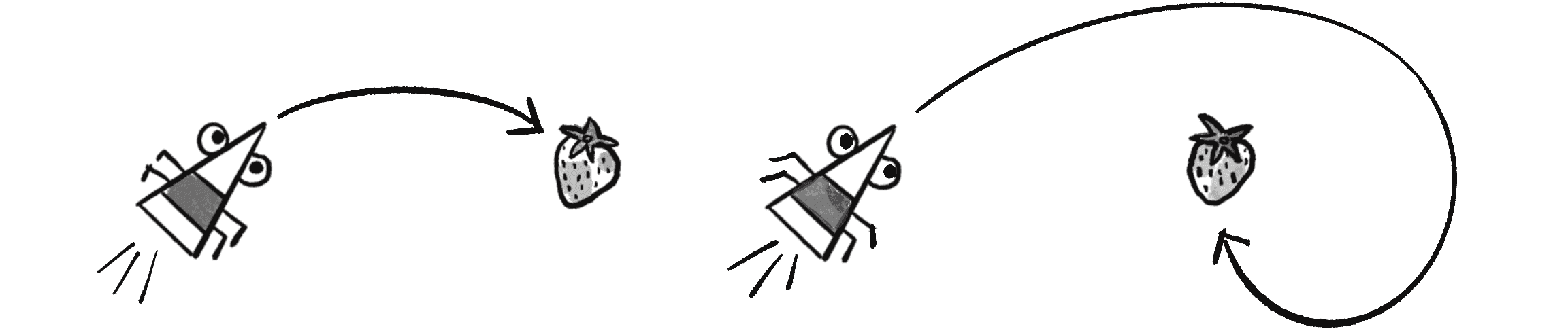

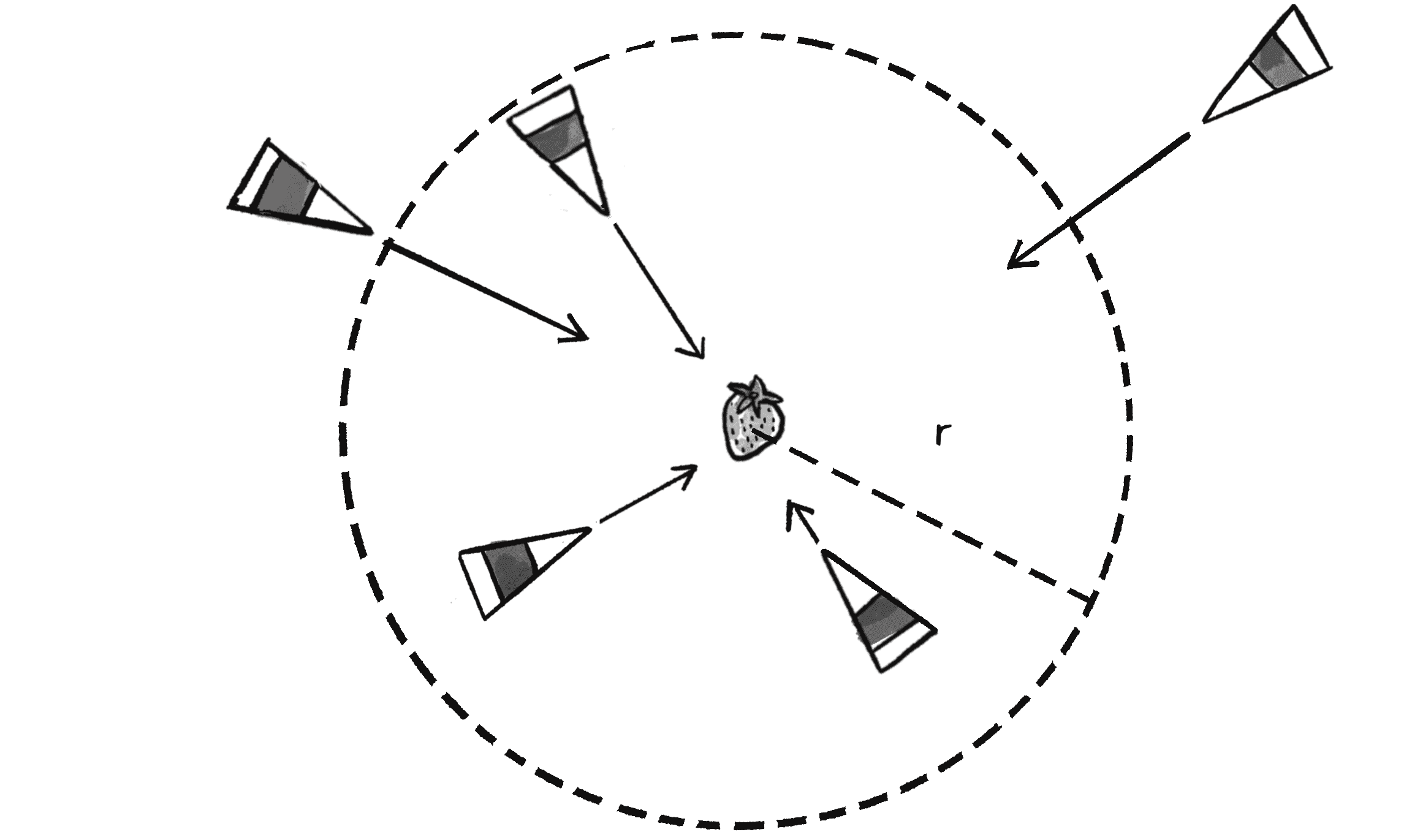

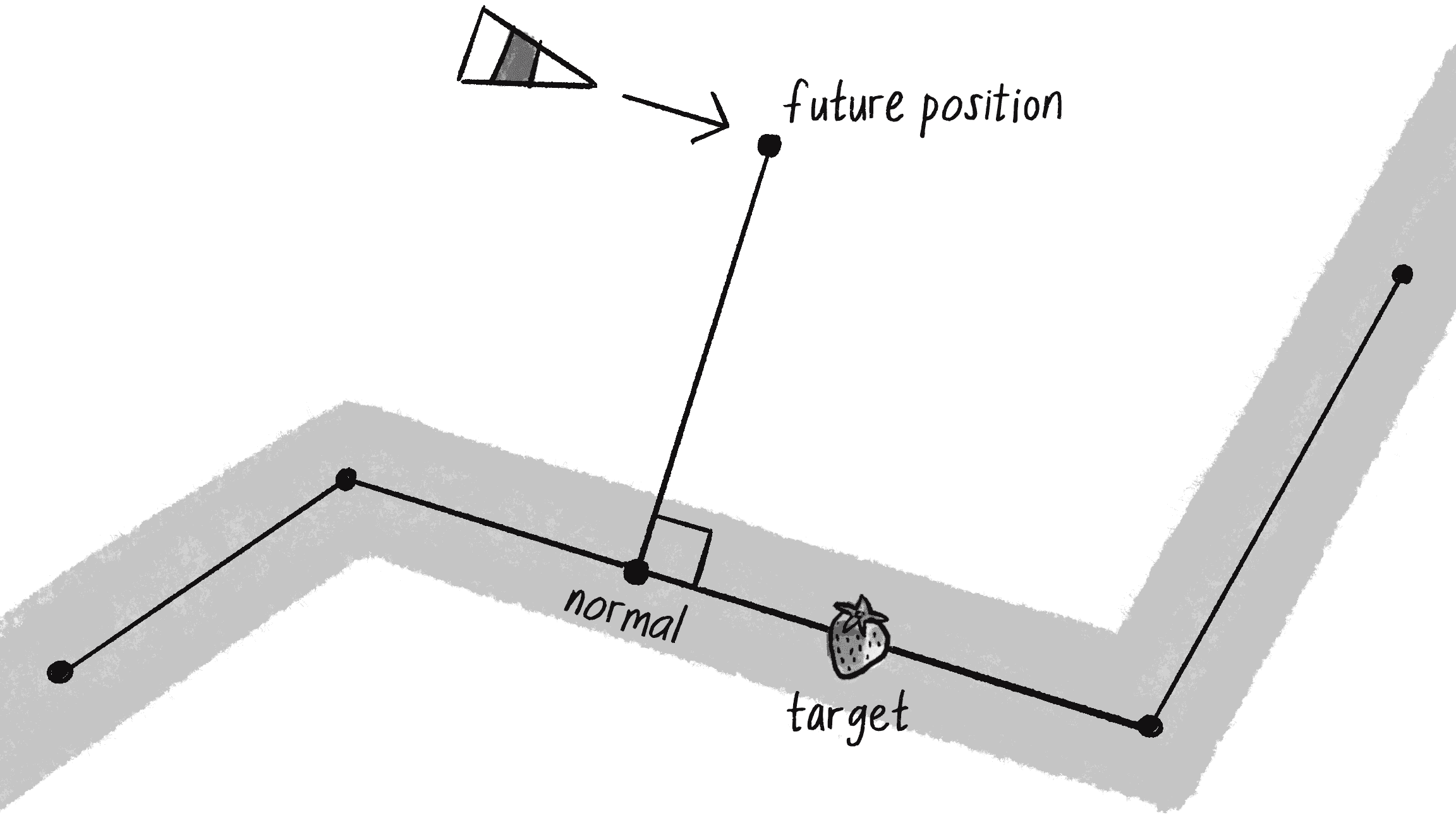

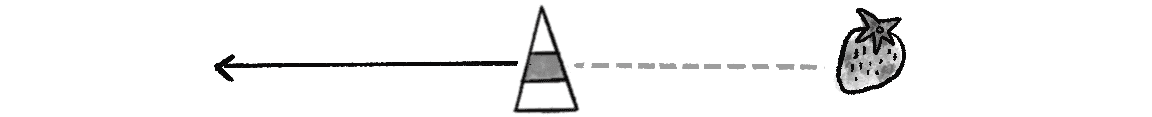

What exactly is a steering force? To answer, consider the following scenario: a vehicle with a current velocity is seeking a target. For fun, let’s think of the vehicle as a bug-like creature that desires to savor a delicious strawberry, as in Figure 5.1.

The vehicle’s goal and subsequent action is to seek the target. Thinking back to Chapter 2, you might begin by making the target an attractor and applying a gravitational force that pulls the vehicle to the target. This would be a perfectly reasonable solution, but conceptually it’s not what I’m looking for here.

I don’t want to simply calculate a force that pushes the vehicle toward its target; rather, I want to ask the vehicle to make an intelligent decision to steer toward the target based on its perception of its own state (its speed and the direction in which it’s currently moving) and its environment (the location of the target). The vehicle should consider how it desires to move (a vector pointing to the target), compare that goal with how it’s currently moving (its velocity), and apply a force accordingly. That’s exactly what Reynolds’s steering force formula says:

Or, as you might write in p5.js:

let steer = p5.Vector.sub(desired, velocity);

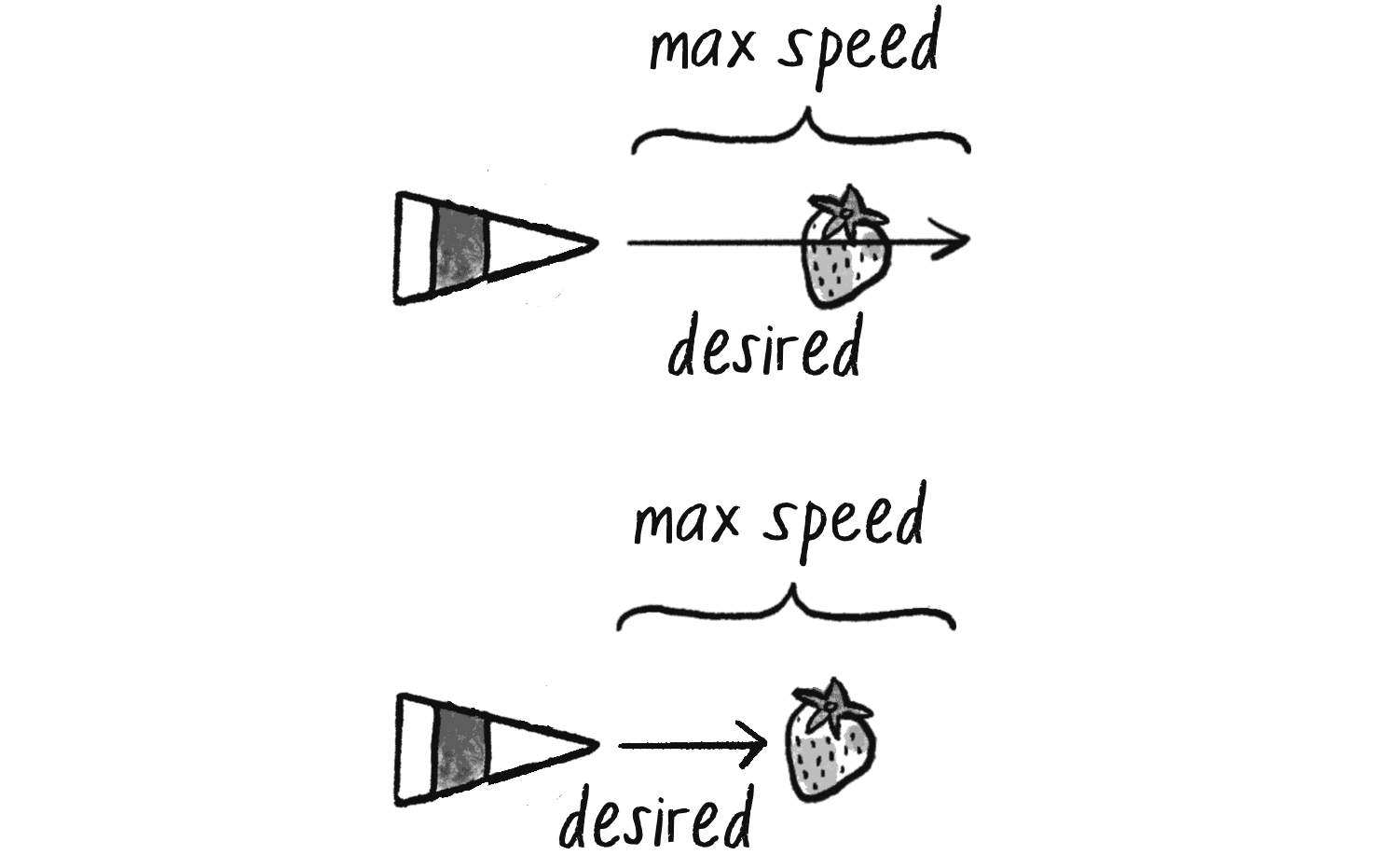

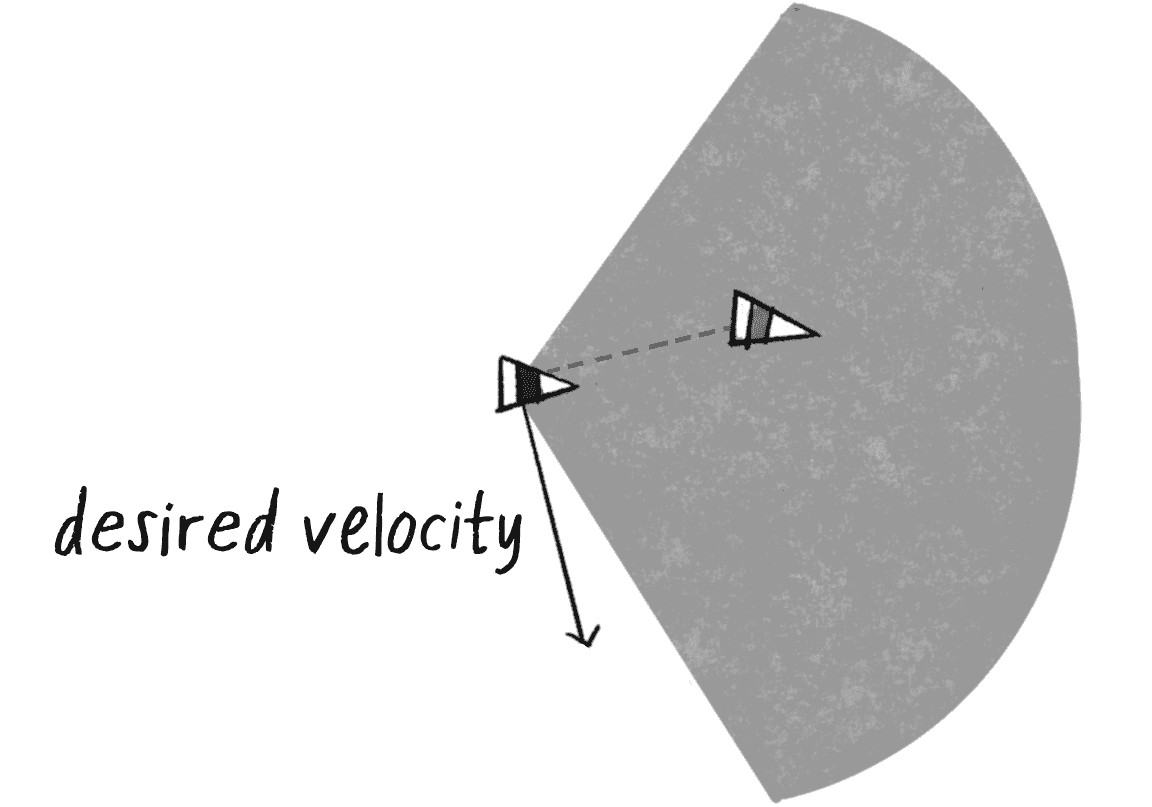

The current velocity isn’t a problem: the Vehicle class already has a variable for that. However, the desired velocity has to be calculated. Take a look at Figure 5.2. If the vehicle’s goal is defined as seeking the target, then its desired velocity is a vector that points from its current position to the target position.

Assuming a p5.Vector called target defining the target’s position, I then have this:

let desired = p5.Vector.sub(target, position);

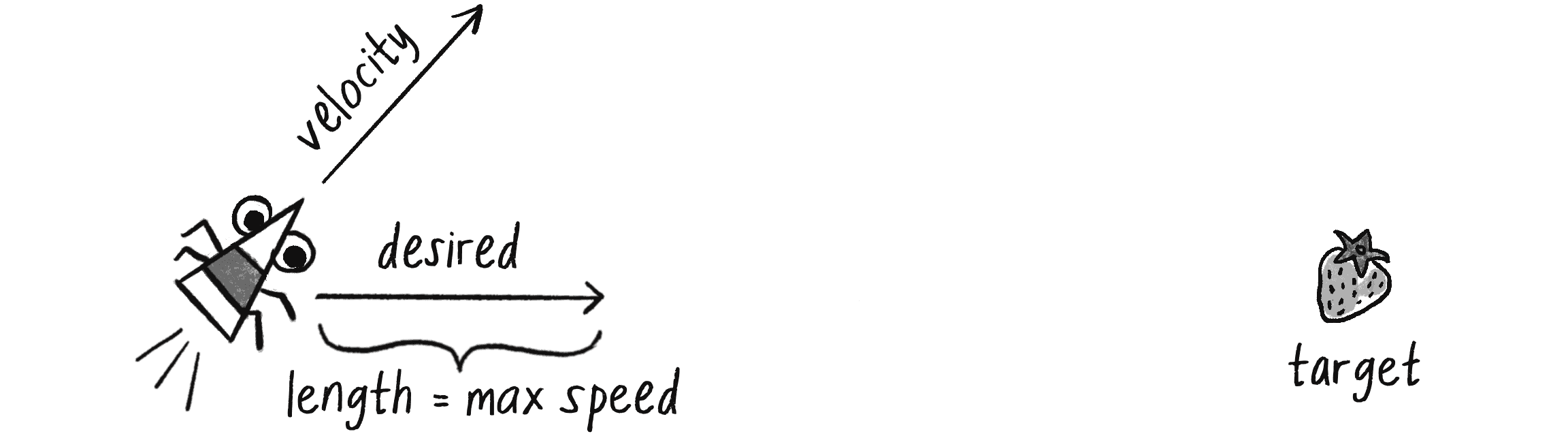

There’s more to the story, however. What if it is a high-resolution canvas and the target is thousands of pixels away? Sure, the vehicle might desire to teleport itself instantly to the target position with a massive velocity, but this won’t make for an effective animation. I’ll restate the desire as follows:

The vehicle desires to move toward the target at the maximum possible speed.

In other words, the desired vector should point from the vehicle’s current position to the target position, with a magnitude equal to the maximum speed of the vehicle, as shown in Figure 5.3.

The concept of maximum speed was introduced in Chapter 1 to ensure that a mover’s speed remained within a reasonable range. However, I didn’t always use it in the subsequent chapters. In Chapter 2, other forces such as friction and drag kept the speed in check, while in Chapter 3, oscillation was caused by opposing forces that kept the speed limited. In this chapter, maximum speed is a key parameter for controlling the behavior of a steering agent, so I’ll include it in all the examples.

While I encourage you to consider how other forces such as friction and drag could be combined with steering behaviors, I’m going to focus only on steering forces for the time being. As such, I can include the concept of maximum speed as a limiting factor in the force calculation. First, I need to add a property to the Vehicle class setting the maximum speed:

class Vehicle {

constructor() {

this.position = createVector();

this.velocity = createVector();

this.acceleration = createVector();

this.maxspeed = ????;

Maximum speed

}

Then, in the desired velocity calculation, I’ll scale according to maximum speed:

let desired = p5.Vector.sub(target, this.position);

desired.setMag(this.maxspeed);

Putting this all together, I can now write a method called seek() that receives a p5.Vector target and calculates a steering force toward that target:

seek(target) {

let desired = p5.Vector.sub(target,this.position);

Calculate the desired velocity to target at max speed.

desired.setMag(this.maxspeed);

let steer = p5.Vector.sub(desired, this.velocity);

Reynolds’s formula for steering force

this.applyForce(steer);

Use the physics model and apply the force to the object’s acceleration.

}

Notice that I finish the method by passing the steering force into applyForce(). This assumes that the code is built on top of the foundation I developed in Chapter 2.

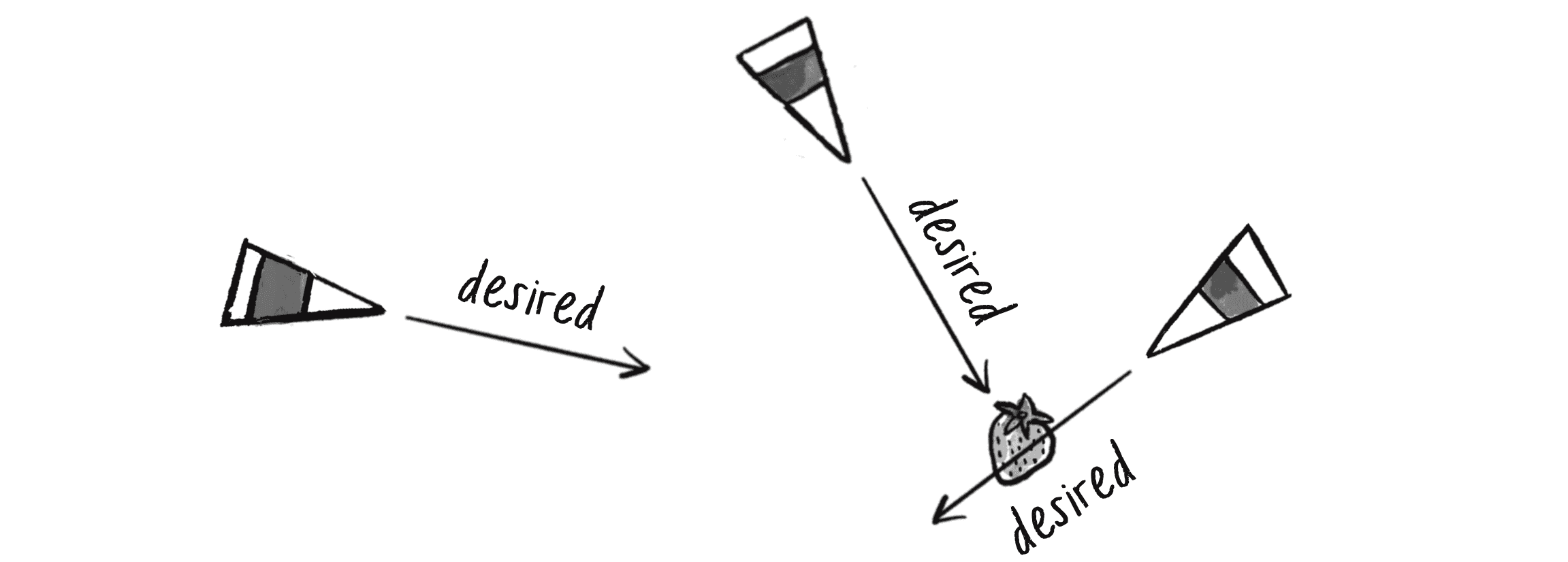

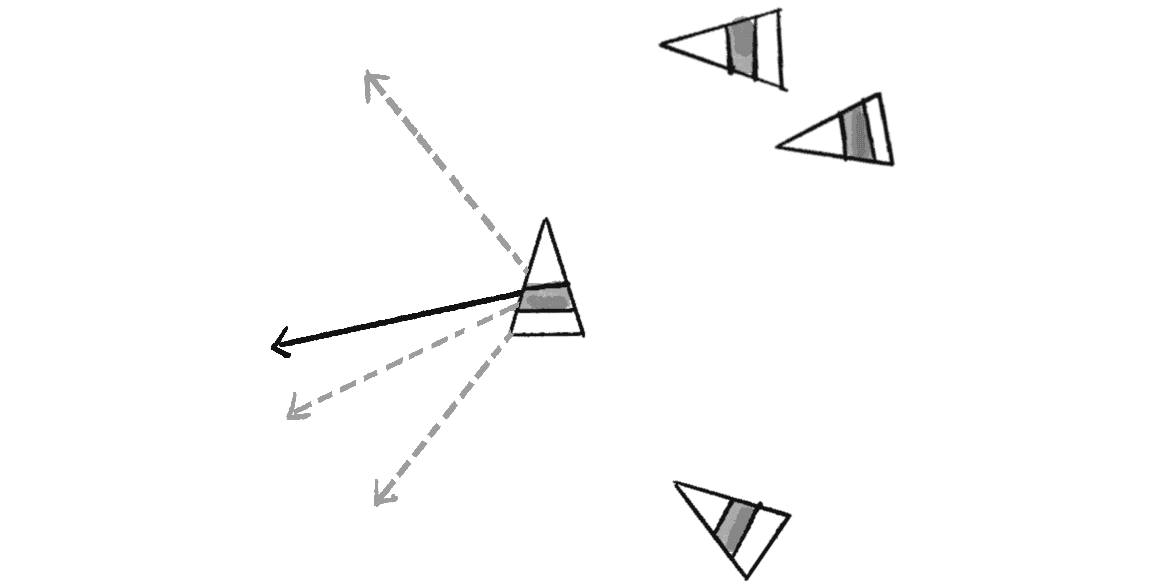

To see why Reynolds’s steering formula works so well, take a look at Figure 5.4. It shows what the steering force looks like relative to the vehicle and target positions.

This force looks quite different from gravitational attraction. Remember one of the principles of autonomous agents: an autonomous agent has a limited ability to perceive its environment, including its own state. Here’s that ability, subtly but powerfully embedded into Reynolds’s steering formula. In the case of gravitational attraction, the force pulling an object toward another is the same regardless of how that object is moving. But here, the vehicle is actively aware of its own velocity, and its steering force compensates accordingly. This adds a lifelike quality to the simulation, as the way in which the vehicle moves toward the target depends on its own understanding of its current motion.

In all this excitement, I’ve missed one last step. What sort of vehicle is this? Is it a super-sleek race car with amazing handling? Or a large city bus that needs a lot of advance notice to turn? A graceful panda or a lumbering elephant? The example code, as it stands, has no feature to account for this variation in steering ability. For that, I need to limit the magnitude of the steering force. I’ll call this limit the maximum force (or maxforce for short):

class Vehicle {

constructor() {

this.position = createVector();

this.velocity = createVector();

this.acceleration = createVector();

this.maxspeed = ????;

Maximum speed

this.maxforce = ????;

Now I also have a maximum force.

}

Now I just need to impose that limit before applying the steering force:

seek(target) {

let desired = p5.Vector.sub(target, this.position);

desired.setMag(this.maxspeed);

let steer = p5.Vector.sub(desired, this.velocity);

steer.limit(this.maxforce);

Limit the magnitude of the steering force.

this.applyForce(steer);

}

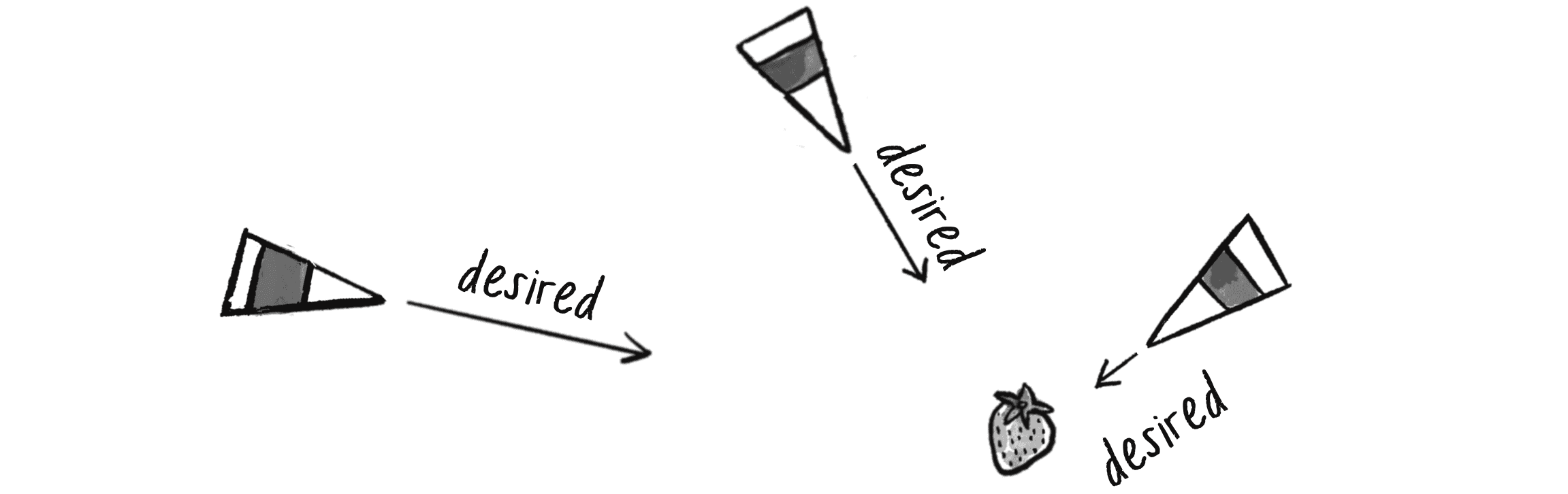

Limiting the steering force brings up an important point: the goal isn’t to get the vehicle to the target as fast as possible. If it were, I would just say, “Set position equal to target,” and the vehicle would instantly teleport to that location! Instead, as Reynolds puts it, the goal is to move the vehicle in a “lifelike and improvisational manner.”

I’m trying to make the vehicle appear to be steering its way to the target, so it’s up to me to play with the forces and variables of the system to simulate a given behavior. For example, a large maximum steering force would result in a very different path than a small one (see Figure 5.5). One isn’t inherently better or worse than the other; it depends on the desired effect. (And of course, these values need not be fixed and could change based on other conditions. Perhaps a vehicle has an energy property: the higher the energy, the better it can steer.)

Here’s the full Vehicle class, incorporating the rest of the elements from the Chapter 2 Mover class.

Example 5.1: Seeking a Target

class Vehicle {

constructor(x, y) {

this.position = createVector(x, y);

this.velocity = createVector(0, 0);

this.acceleration = createVector(0, 0);

this.r = 6.0;

Additional variable for size

this.maxspeed = 8;

this.maxforce = 0.2;

Arbitrary values for max speed and force; try varying these!

}

update() {

this.velocity.add(this.acceleration);

this.velocity.limit(this.maxspeed);

this.position.add(this.velocity);

this.acceleration.mult(0);

}

Standard update function

applyForce(force) {

this.acceleration.add(force);

}

Newton’s second law (skipping the math)

seek(target) {

let desired = p5.Vector.sub(target, this.position);

desired.setMag(this.maxspeed);

let steer = p5.Vector.sub(desired, this.velocity);

steer.limit(this.maxforce);

this.applyForce(steer);

}

The seek steering force algorithm

show() {

let angle = this.velocity.heading();

fill(127);

stroke(0);

push();

translate(this.position.x, this.position.y);

rotate(angle);

beginShape();

vertex(this.r * 2, 0);

vertex(-this.r * 2, -this.r);

vertex(-this.r * 2, this.r);

endShape(CLOSE);

pop();

The vehicle is a triangle pointing in the direction of velocity.

}

}

Note that, unlike the circles used to represent movers and particles in previous chapters, the Vehicle object is drawn as a triangle, defined as three custom vertices set with beginShape() and endShape(). This allows the vehicle to be represented in a way that indicates its direction, determined using the heading() method, as demonstrated in Chapter 3.

Exercise 5.1

Implement a fleeing steering behavior (the desired velocity is the same as seek, but pointed in the opposite direction).

Exercise 5.2

Create a sketch in which a vehicle’s maximum force and maximum speed don’t remain constant but vary according to environmental factors.

Exercise 5.3

Implement a seeking behavior with a moving target, often referred to as pursuit. In this case, your desired vector won’t point toward the object’s current position, but rather its future position as extrapolated from its current velocity. You’ll see this ability for a vehicle to “predict the future” in later examples. The solution is covered in the “Pursue and Evade” video on the Coding Train website.

The Arrive Behavior

After working for a bit with the seeking behavior, you’re probably asking yourself, “What if I want the vehicle to slow down as it approaches the target?” Before I can even begin to answer this question, I should explain why the seek behavior causes the vehicle to fly past the target in the first place, forcing it to turn around and go back. Consider the brain of a seeking vehicle. What is it thinking at each frame of the animation?

- I want to go as fast as possible toward the target.

- I want to go as fast as possible toward the target.

- I want to go as fast as possible toward the target.

- I want to go as fast as possible toward the target.

- I want to go as fast as possible toward the target.

- and so on . . .

The vehicle is so gosh darn excited about getting to the target that it doesn’t bother to make any intelligent decisions about its speed. No matter the distance to the target, it always wants to go as fast as possible. When the vehicle is very close, it will therefore end up overshooting the target (see Figure 5.6, top).

In some cases, this is the desired behavior. (Consider a puppy going after its favorite toy: it’s not slowing down, no matter how close it gets!) However, in many other cases (a car pulling into a parking spot, a bee landing on a flower), the vehicle’s thought process needs to consider its speed relative to the distance from its target (see Figure 5.6, bottom). For example:

- I’m very far away. I want to go as fast as possible toward the target.

- I’m somewhat far away. I still want to go as fast as possible toward the target.

- I’m getting close. I want to go more slowly toward the target.

- I’m almost there. I want to go very slowly toward the target.

- I’m there. I want to stop!

How can you implement this arriving behavior in code? Think back to the seek() method. Which part of the code sets the magnitude of the desired velocity?

let desired = p5.Vector.sub(target, this.position);

desired.setMag(this.maxspeed);

This always sets the magnitude of the desired vector to maxspeed, as in Figure 5.7.

What if instead the desired velocity’s magnitude were equal to half the distance?

let desired = p5.Vector.sub(target, this.position);

desired.mult(0.5);

I’d still want to limit the magnitude of desired to no more than the maximum speed, to keep vehicles that are very far away from going ridiculously fast (Figure 5.8).

While this change nicely demonstrates the goal of tying the desired speed to the distance from the target, it’s not a particularly good solution. After all, 10 pixels away is rather close, and a desired speed of 5 is rather large. Something like a desired velocity with a magnitude equal to 5 percent of the distance might work better:

let desired = p5.Vector.sub(target, this.position);

desired.mult(0.05);

Reynolds describes an even more sophisticated approach. Imagine a circle around the target with a given radius r. If the vehicle is within that circle, it gradually slows down—from the maximum speed at the very edge of the circle to zero speed at the target (Figure 5.9).

In other words, if the distance from the target is less than r, the desired speed ranges from 0 to the maximum speed mapped according to that distance.

Example 5.2: Arriving at a Target

arrive(target) {

let desired = p5.Vector.sub(target, this.position);

let d = desired.mag();

The distance is the magnitude of the vector pointing from the position to the target.

if (d < 100) {

If we are closer than 100 pixels . . .

let m = map(d, 0, 100, 0, this.maxspeed);

desired.setMag(m);

. . . set the magnitude according to how close we are.

} else {

desired.setMag(this.maxspeed);

Otherwise, proceed at maximum speed.

}

let steer = p5.Vector.sub(desired, this.velocity);

The usual steering = desired – velocity

steer.limit(this.maxforce);

this.applyForce(steer);

}

The arrive behavior is a great demonstration of an autonomous agent’s perception of the environment—including its own state. This model differs from the inanimate forces of Chapter 2: a celestial body attracted to another body doesn’t know it is experiencing gravity, whereas a cheetah chasing its prey knows it’s chasing.

The key is in the way the forces are calculated. For instance, in the gravitational attraction sketch (Example 2.6), the force always points directly from the object to the target—the exact direction of the desired velocity. Here, by contrast, the vehicle perceives its distance to the target and adjusts its desired speed accordingly, slowing as it gets closer. The force on the vehicle itself is therefore based not just on the desired velocity but also on the desired velocity relative to its current velocity. The vehicle accounts for its own state as part of its assessment of the environment.

Put another way, the magic of Reynolds’s desired minus velocity equation is that it essentially makes the steering force a manifestation of the current velocity’s error: “I’m supposed to be going this fast in this direction, but I’m actually going this fast in another direction. My error is the difference between where I want to go and where I’m currently going.” Sometimes this can lead to seemingly unexpected results, as in Figure 5.10.

In this example of the arrive behavior, the vehicle is moving too fast toward the target. The steering force, or error, tells it to slow down by actually pointing in the opposite direction, away from the target. By contrast, with gravitational attraction, you would never have a force pointing away from the target, no matter how close the target is. Taking the error and applying it as a steering force results in more dynamic, lifelike simulations.

Your Own Behaviors

The first two examples I’ve covered—seek and arrive—boil down to calculating a single vector for each behavior: the desired velocity. In fact, every single one of Reynolds’s steering behaviors follows this same pattern. In this chapter, I’m going to walk through more of Reynolds’s behaviors—flow-field following, path following, and flocking. First, however, I want to emphasize again that these are examples—demonstrations of common steering behaviors that are useful in procedural animation. They aren’t the be-all and end-all of what you can do. As long as you can come up with a vector that describes a vehicle’s desired velocity, you’ve created your own steering behavior.

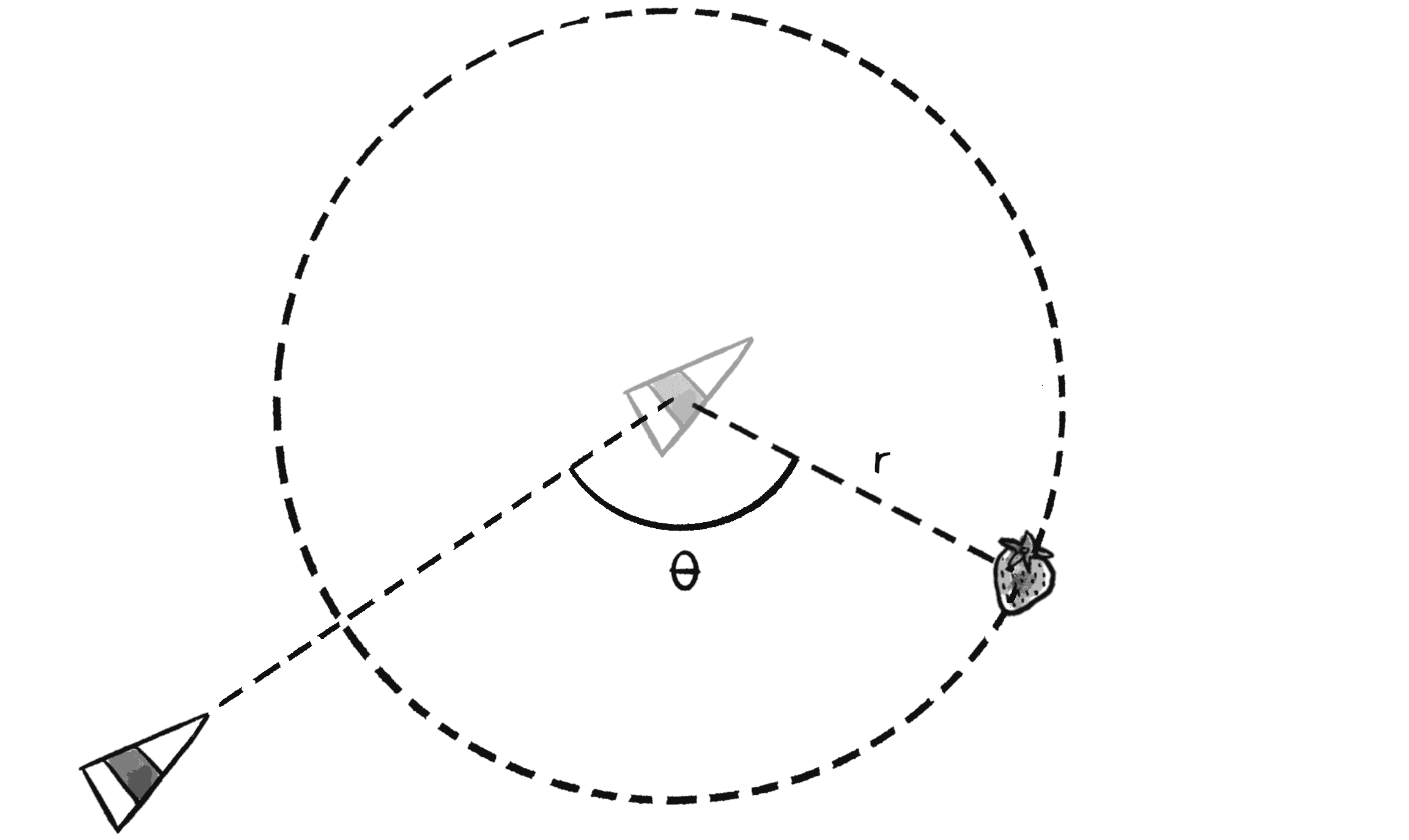

For example, let’s see how Reynolds defines the desired velocity for his wandering behavior:

Wandering is a type of random steering which has some long-term order: the steering direction on one frame is related to the steering direction on the next frame. This produces more interesting motion than, for example, simply generating a random steering direction each frame.

For Reynolds, the goal of wandering isn’t random motion, but rather a sense of moving in one direction for a little while, wandering off in the next direction for a little bit, and so on. Figure 5.11 illustrates how Reynolds calculates a target to seek in order to achieve such an effect.

First, the vehicle predicts its future position as a fixed distance in front of it (in the direction of its current velocity). Then it draws a circle with radius r centered on that position and picks a random point along the circumference of the circle. That point, which moves randomly around the circle for each frame of animation, is the vehicle’s target, so its desired velocity points in that direction.

Sounds absurd, right? Or, at the very least, a bit arbitrary. In fact, this is a clever and thoughtful solution—it uses randomness to drive a vehicle’s steering, but constrains that randomness along the path of a circle to keep the vehicle’s movement from appearing jittery and, well, totally random.

The seemingly random and arbitrary nature of this solution should drive home the point I’m trying to make: these are made-up behaviors, even if they’re inspired by real-life motion. You can just as easily concoct another elaborate scenario to compute a desired velocity. And you should!

Exercise 5.4

Write the code for Reynolds’s wandering behavior. Use polar coordinates to calculate the vehicle’s target along a circular path.

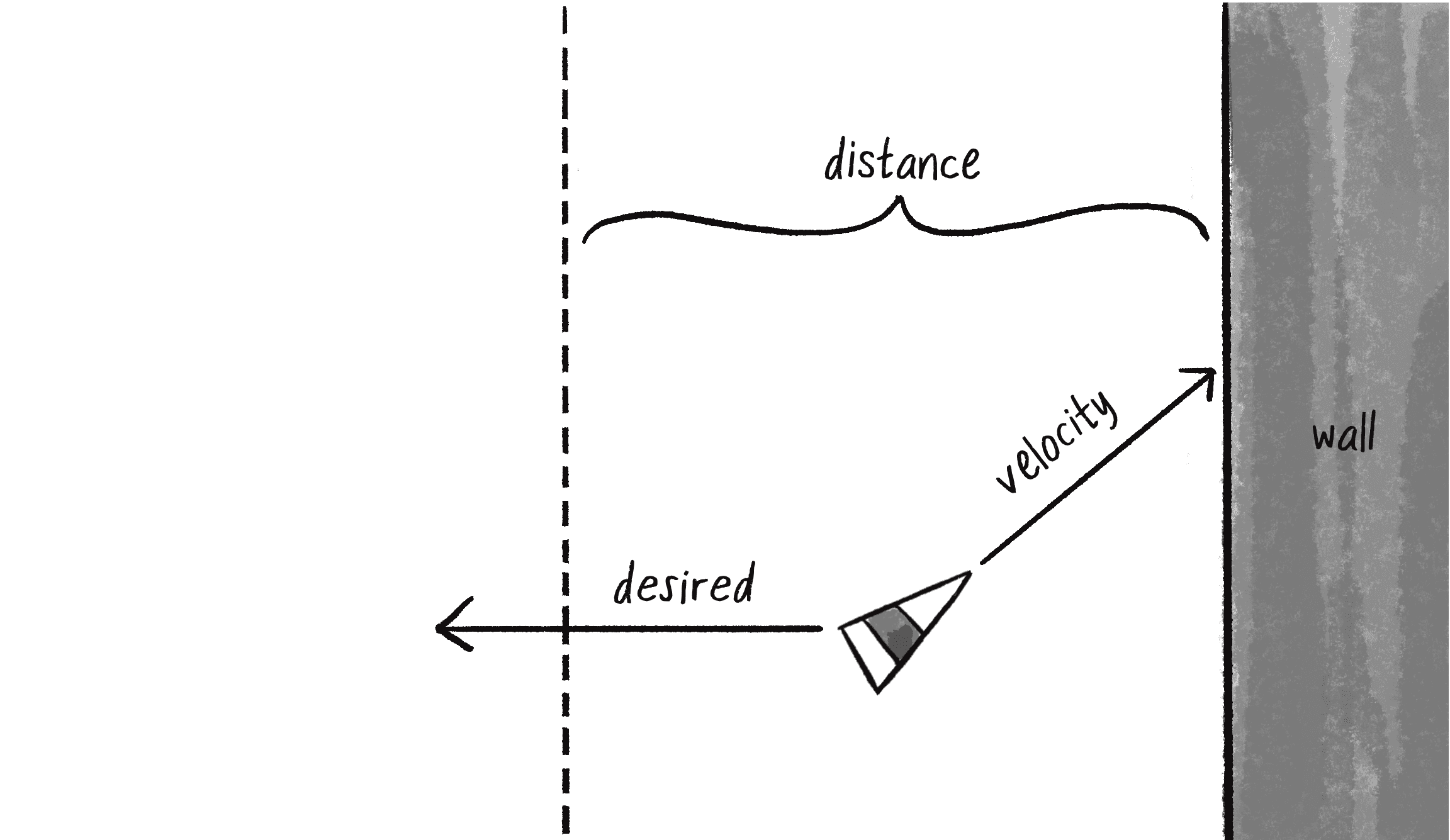

To give another example, say I want to create a steering behavior called stay within walls. To define the desired velocity, I’ll make a rule: if a vehicle comes within a distance d of a wall, that vehicle desires to move at maximum speed in the opposite direction of the wall (see Figure 5.12).

If I define the walls of the space as the edges of a canvas and an offset distance equal to 25, I can write the code for this with a series of if statements.

Example 5.3: “Stay Within Walls” Steering Behavior

boundaries(offset) {

The method receives an offset from the edges.

let desired = null;

Start with a null desired velocity.

if (this.position.x < offset) {

desired = createVector(this.maxspeed, this.velocity.y);

} else if (this.position.x > width - offset) {

desired = createVector(-this.maxspeed, this.velocity.y);

}

if (this.position.y < offset) {

desired = createVector(this.velocity.x, this.maxspeed);

} else if (this.position.y > height - offset) {

desired = createVector(this.velocity.x, -this.maxspeed);

}

Make a desired velocity that retains the y-direction of the vehicle but points the x-direction directly away from the canvas edges.

if (desired !== null) {

desired.normalize();

desired.mult(this.maxspeed);

let steer = p5.Vector.sub(desired, this.velocity);

steer.limit(this.maxforce);

this.applyForce(steer);

}

If the desired velocity is non-null, apply steering.

}

In this boundaries() method, you might be wondering why I set the desired velocity to null at the outset. Why not just set desired to a vector of 0? Remember, the steering force equals the desired velocity minus the current velocity! If the vehicle desires to move at 0 velocity, the resulting force would slow the vehicle to a stop. By initializing desired to null and checking that it’s non-null before applying the steering force, the vehicle won’t be affected at all when it’s comfortably away from the edges of the canvas.

Exercise 5.5

Come up with your own arbitrary scheme for calculating a desired velocity.

Flow Fields

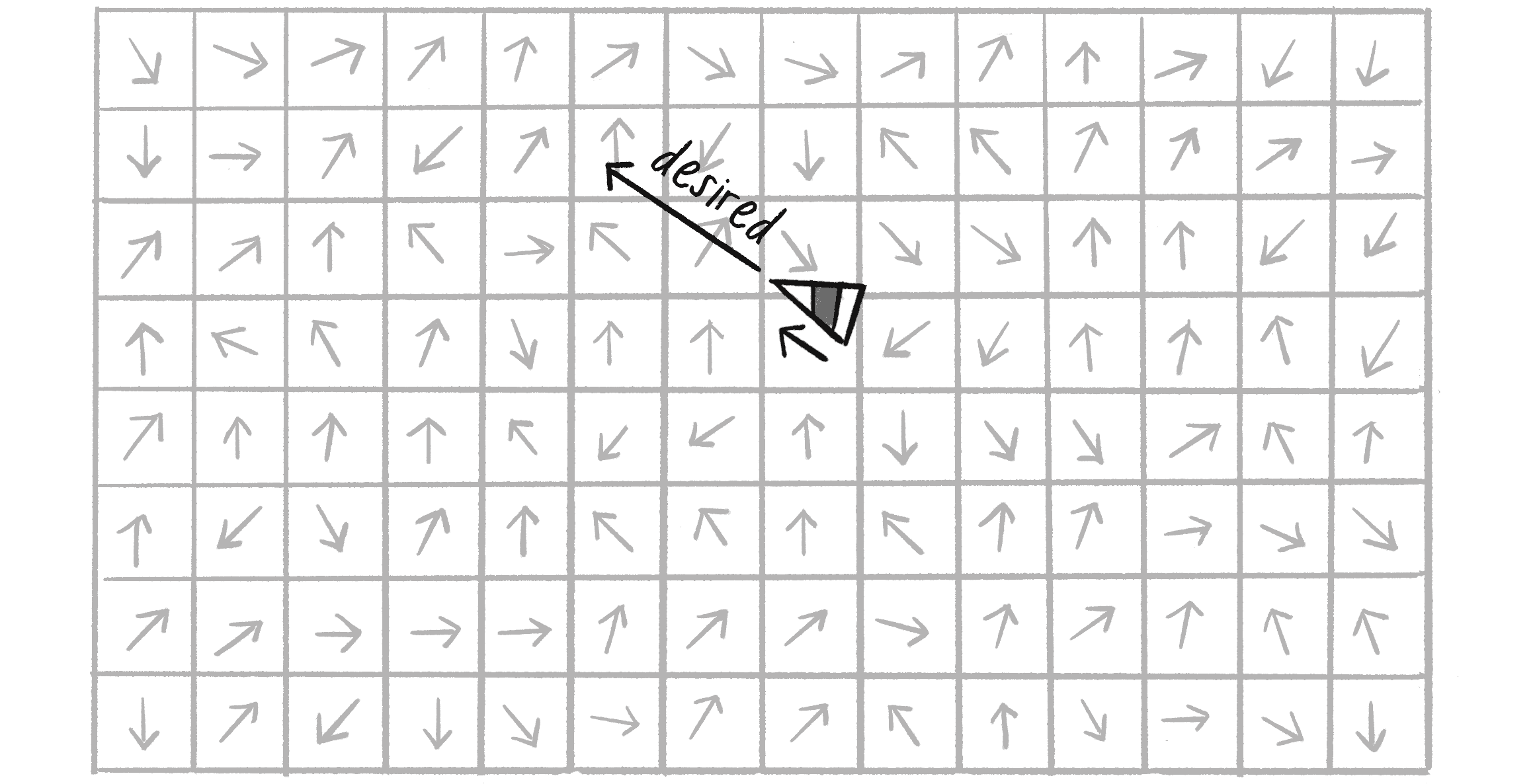

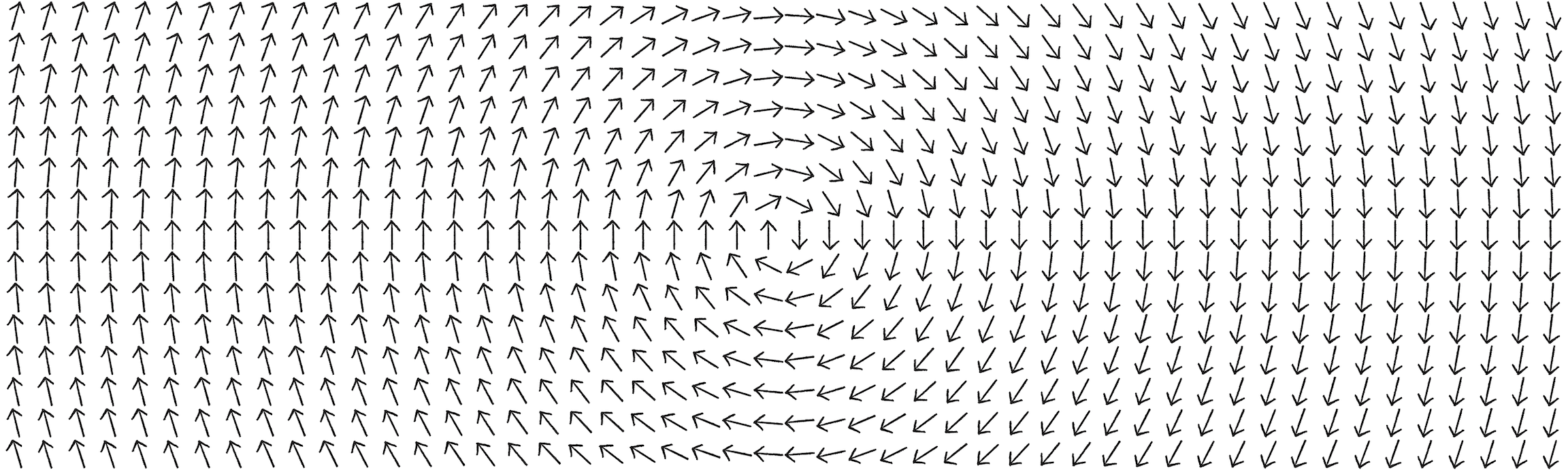

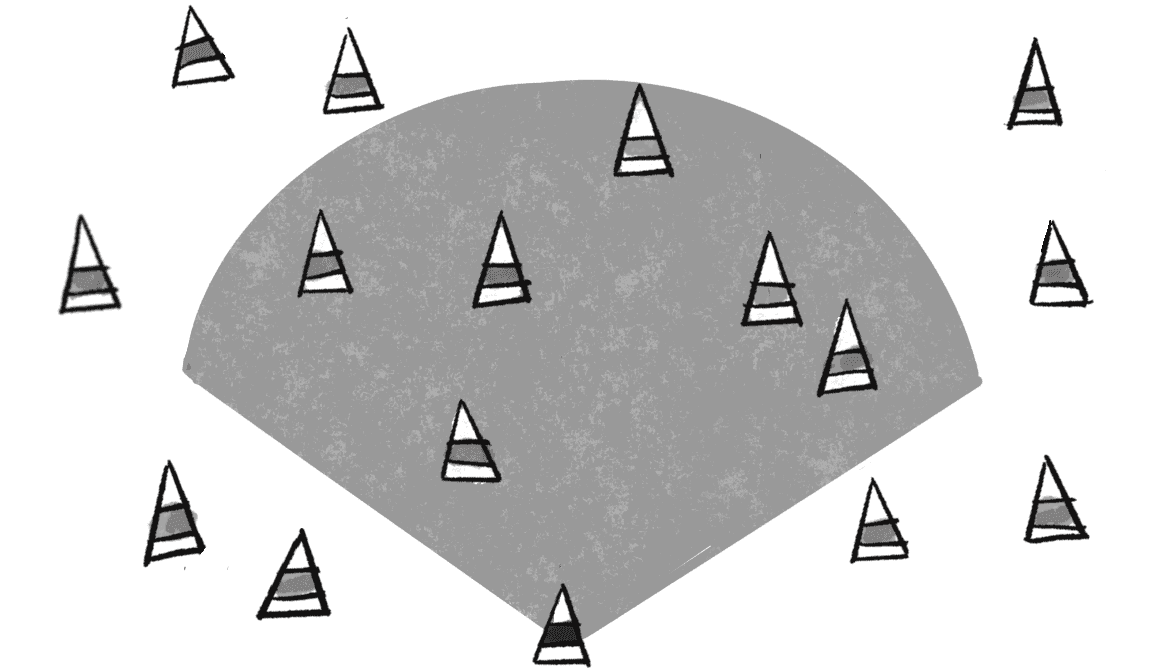

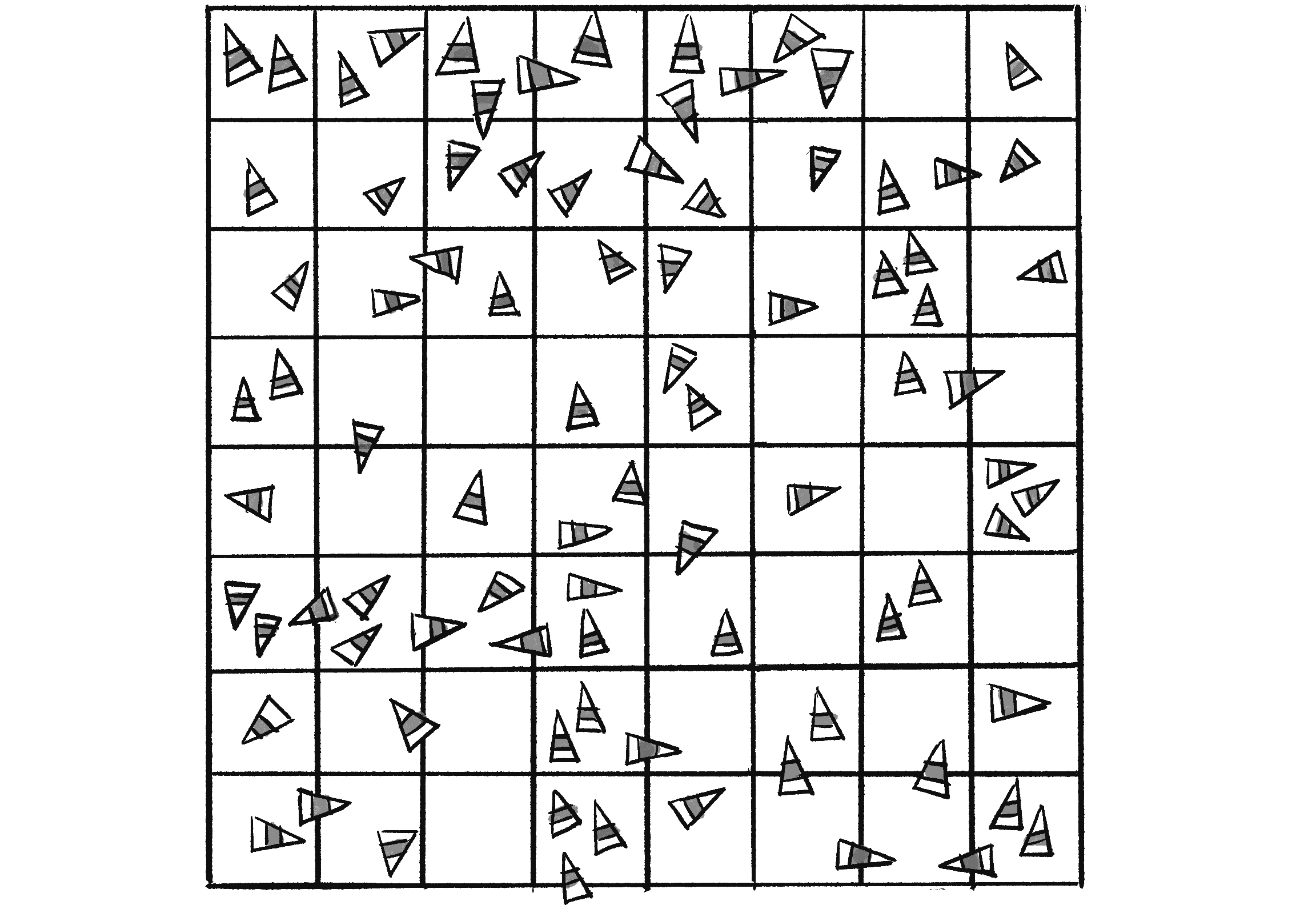

Another one of Reynolds’s steering behaviors is flow-field following. But what is a flow field? Think of the canvas as a grid (Figure 5.13). In each cell of the grid lives an arrow pointing in a certain direction—you know, a vector. As a vehicle moves around the canvas, it asks, “Hey, what arrow is beneath me? That’s my desired velocity!”

Reynolds’s own flow-field example involves the vehicle looking ahead to its future position and following the vector at that spot. For simplicity’s sake, however, I’ll instead have the vehicle follow the vector at its current position.

Before I can write the additional code for the Vehicle class to follow a flow field, I first need a class that describes the flow field. Since a flow field is essentially a grid of vectors, a 2D array is a convenient data structure to represent it, as I can reference each element with two indices, the cell’s column and row in the grid. If you aren’t familiar with 2D arrays, I suggest reviewing my video tutorial on “2D Arrays in JavaScript”.

class FlowField {

constructor() {

this.resolution = ????;

Resolution of the grid relative to the canvas width and height in pixels

this.cols = ????;

this.rows = ????;

How many columns and how many rows are in the grid?

this.field = new Array(this.cols);

for (let i = 0; i < this.cols; i++) {

this.field[i] = new Array(this.rows);

}

The field will be a 2D array of vectors.

}

How should I fill in the missing values? Let’s say I have a canvas that’s 200 pixels wide by 200 pixels high. In theory, I could make a flow field that has a vector for every single pixel, meaning 40,000 vectors total (). This isn’t a terribly unreasonable number, but in this context, one vector per pixel is overkill. I can easily get by with, say, one vector every 10 pixels (). My resolution variable sets the size of each cell in pixels. Then I can calculate the number of columns and rows based on the size of the canvas divided by the resolution:

constructor() {

this.resolution = 10;

this.cols = floor(width / this.resolution);

The total number of columns equals the width divided by the resolution.

this.rows = floor(height / this.resolution);

The total number of rows equals the height divided by the resolution.

this.field = new Array(this.cols);

for (let i = 0; i < this.cols; i++) {

this.field[i] = new Array(this.rows);

}

The field will be a 2D array of vectors.

}

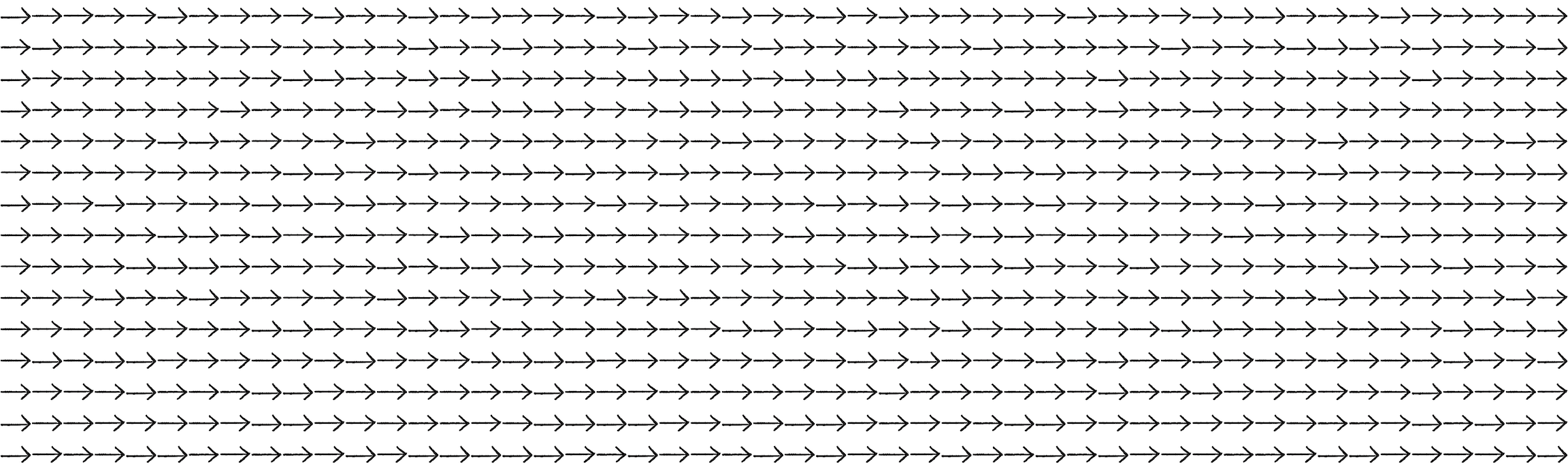

Now that I’ve set up the data structure for the flow field, it’s time to compute the flow field’s vectors. How do I do that? However I want! Perhaps I’d like every vector in the flow field pointing to the right (Figure 5.14).

For that, I can just set each vector to (1, 0).

for (let i = 0; i < this.cols; i++) {

for (let j = 0; j < this.rows; j++) {

Use a nested loop to hit every column and every row of the flow field.

this.field[i][j] = createVector(1, 0);

Arbitrary decision to make each vector point to the right

}

}

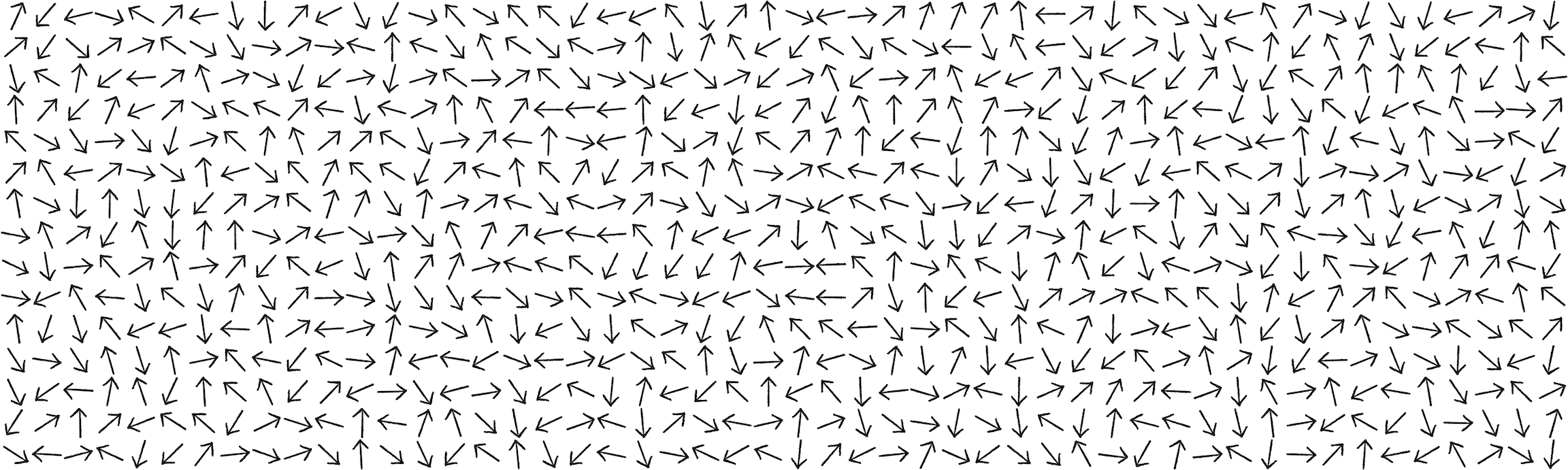

Maybe I’d prefer the vectors to point in random directions (Figure 5.15).

Easy. Just use the p5.Vector class’s random2D() method to assign each vector:

for (let i = 0; i < this.cols; i++) {

for (let j = 0; j < this.rows; j++) {

this.field[i][j] = p5.Vector.random2D();

A random vector

}

}

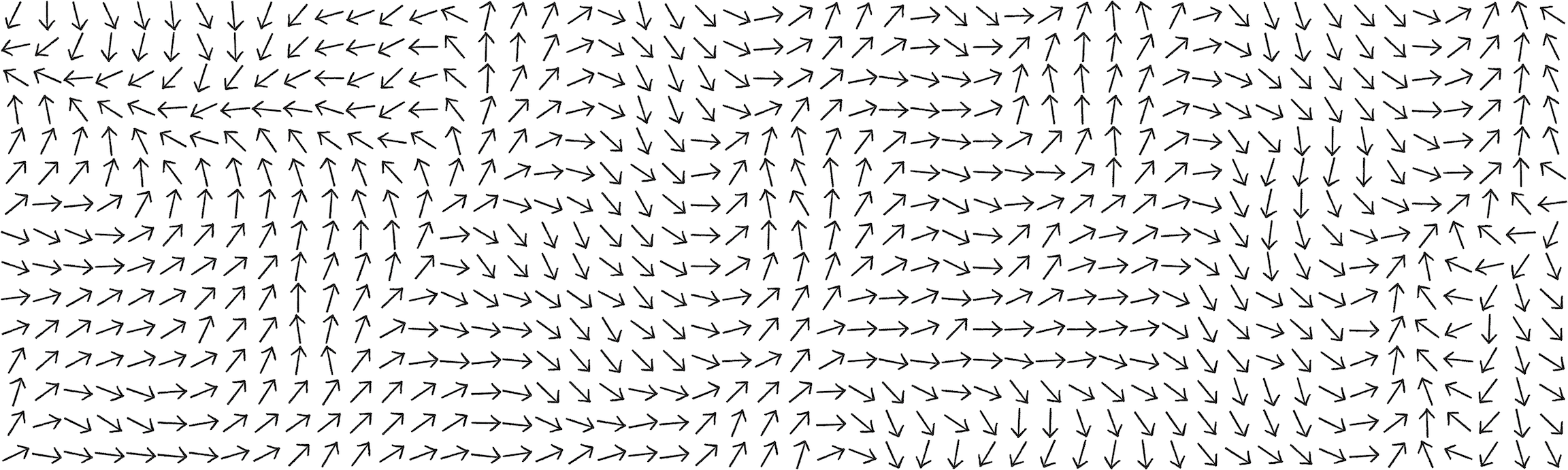

What about using 2D Perlin noise (Figure 5.16)?

Just map each noise value to an angle from to and create a vector from that angle:

let xoff = 0;

for (let i = 0; i < this.cols; i++) {

let yoff = 0;

for (let j = 0; j < this.rows; j++) {

let angle = map(noise(xoff, yoff), 0, 1, 0, TWO_PI);

2D noise

this.field[i][j] = p5.Vector.fromAngle(angle);

yoff += 0.1;

}

xoff += 0.1;

}

Now I’m getting somewhere. Calculating the direction of the vectors by using Perlin noise is a great way to simulate a variety of natural effects, such as irregular gusts of wind or the meandering path of a river. I’ll note, however, that this noise mapping generates a field that prefers flowing left. Since Perlin noise has a Gaussian-like distribution, angles near are more likely to be selected. For Figure 5.16, I used a range of 0 to to counteract this tendency, similarly to the way I applied in Chapter 4 to represent a range of angles for spinning confetti particles. Ultimately, of course, there’s no one correct way to calculate the vectors of a flow field; it’s up to you to decide what you’re looking to simulate.

Exercise 5.6

Write the code to calculate a flow field so that the vectors swirl in circles around the center of the canvas.

let x = i * width / cols;

let y = j * height / rows;

flowfield[i][j] = createVector(width / 2 - x, height / 2 - y);

flowfield[i][j].rotate(PI / 2);

Now that I have a 2D array storing the flow-field vectors, I need a way for the vehicle to look up its desired velocity. For that, I simply divide the vehicle’s x- and y-position by the resolution of the grid. This gives me the indices of the desired vector in the 2D array. For example, if the resolution is 10 and the vehicle is at (100, 50), I’ll want to look up column 10 and row 5:

let column = floor(this.position.x / this.resolution);

let row = floor(this.position.y / this.resolution);

Because a vehicle could theoretically wander off the p5.js canvas, employing the constrain() function helps ensure that I don’t look outside the bounds of the flow-field array. Here’s a method called lookup(), which I’ll add to the FlowField class, that receives a vector (the position of the vehicle) and returns the corresponding flow-field vector for that position:

lookup(position) {

let column = constrain(floor(position.x / this.resolution), 0, this.cols - 1);

let row = constrain(floor(position.y / this.resolution), 0, this.rows - 1);

Use constrain().

return this.field[column][row].copy();

Use copy() to ensure that a copy of the vector is returned.

}

Before moving on to the Vehicle class, let’s look at the FlowField class code all together, this time using Perlin noise to compute the vector directions:

class FlowField {

constructor(r) {

this.resolution = r;

this.cols = width / this.resolution;

this.rows = height / this.resolution;

Determine the number of columns and rows.

this.field = new Array(this.cols);

for (let i = 0; i < this.cols; i++) {

this.field[i] = new Array(this.rows);

}

A flow field is a 2D array of vectors. The example includes a separate function to create that array.

this.init();

}

init() {

The init() function fills the 2D array with vectors.

noiseSeed(random(10000));

let xoff = 0;

for (let i = 0; i < this.cols; i++) {

let yoff = 0;

for (let j = 0; j < this.rows; j++) {

Reseed noise for a new flow field each time.

let angle = map(noise(xoff, yoff), 0, 1, 0, TWO_PI);

this.field[i][j] = p5.Vector.fromAngle(angle);

yoff += 0.1;

In this example, use Perlin noise to create the vectors.

}

xoff += 0.1;

}

}

lookup(position) {

let column = constrain(floor(position.x / this.resolution), 0, this.cols - 1);

let row = constrain(floor(position.y / this.resolution), 0, this.rows - 1);

return this.field[column][row].copy();

}

A function to return a vector based on a position

}

Now let’s assume there’s a FlowField object called flow. Using that object’s lookup() method, a vehicle can then retrieve a desired velocity from the flow field and use Reynolds’s steering formula to calculate a force.

Example 5.4: Flow-Field Following

follow(flow) {

let desired = flow.lookup(this.position);

desired.setMag(this.maxspeed);

What is the vector at that spot in the flow field?

let steer = p5.Vector.sub(desired, this.velocity);

steer.limit(this.maxforce);

this.applyForce(steer);

Steering is desired minus velocity.

}

Notice that lookup() is a method of the FlowField class, rather than of Vehicle. While you certainly could place lookup() within the Vehicle class instead, from my perspective, placing it in FlowField aligns best with the OOP principle of encapsulation. The lookup task, which retrieves a vector based on a position from the flow field, is inherently tied to the data of the FlowField object.

You may also notice some familiar elements from Chapter 4, such as the use of an array of vehicles. Although the vehicles here operate independently, this is a great first step toward thinking about the group behaviors that I’ll introduce later in this chapter.

Exercise 5.7

Adapt the flow-field example so the vectors change over time. (Hint: Try using the third dimension of Perlin noise!)

Exercise 5.8

Can you create a flow field from an image? For example, try having the vectors point from dark to light colors (or vice versa).

Path Following

The next steering behavior formulated by Reynolds that I��’d like to explore is path following. But let me quickly clarify something first: the behavior here is path following, not path finding. Pathfinding refers to an algorithm that solves for the shortest distance between two points, often in a maze. With path following, a predefined route, or path, already exists, and the vehicle simply tries to follow it.

In this section, I will work through the algorithm, including the corresponding mathematics and code. However, before doing so, it’s important to cover a key concept in vector math that I skipped over in Chapter 1: the dot product. I haven’t needed it yet, but it’s necessary here and likely will prove quite useful for you beyond just this example.

The Dot Product

Remember all the vector math covered in Chapter 1? Add, subtract, multiply, and divide? Figure 5.17 has a recap of some of these operations.

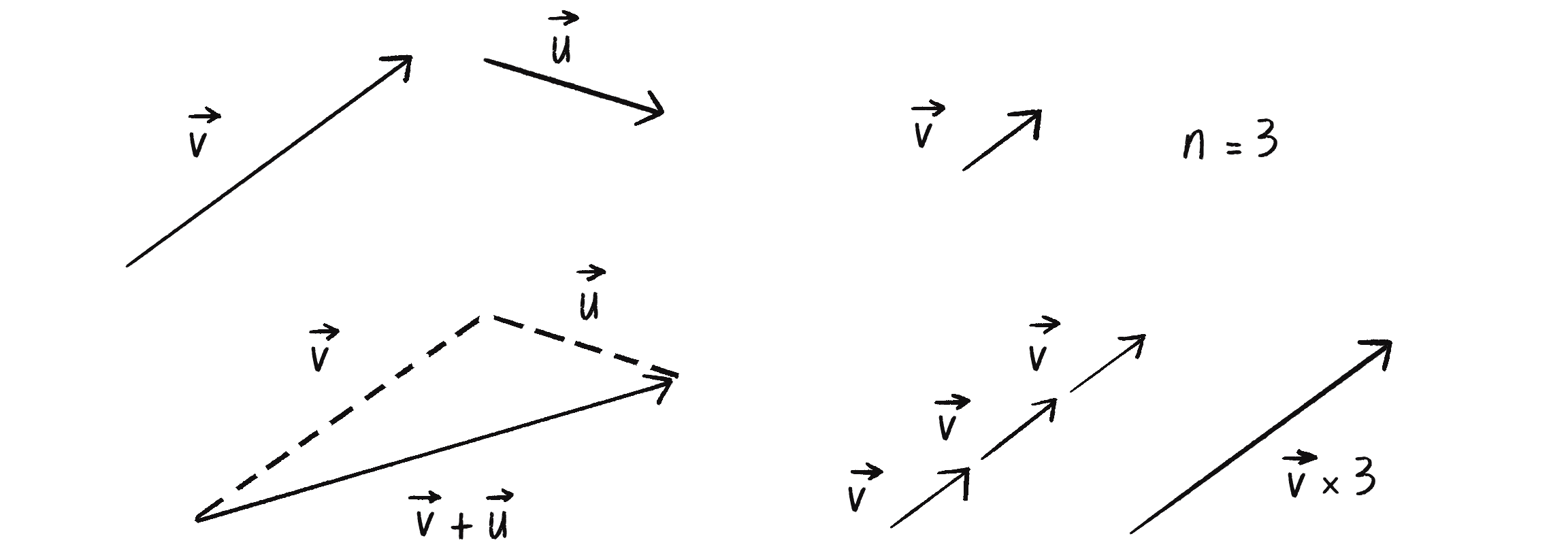

Notice that multiplication involves multiplying a vector by a scalar value. This makes sense; when you want a vector to be twice as large (but facing the same direction), multiply it by 2. When you want it to be half the size, multiply it by 0.5. However, several other multiplication-like operations involve a pair of vectors that are useful in certain scenarios—the dot product, the cross product, and something called the Hadamard product. For now, I’m going to focus on the dot product.

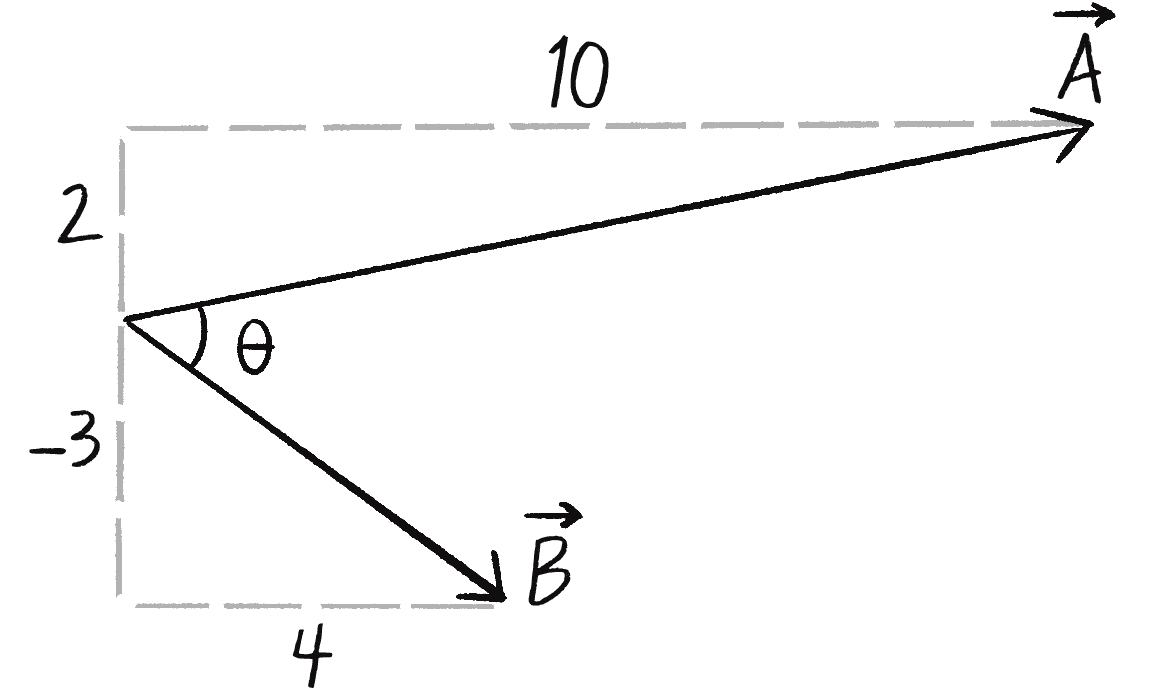

Assume vectors and :

The formula for the dot product (represented by the character) is as follows:

Crucially, the result of the dot product is a scalar value (a single number) and not a vector, even though the inputs are two vectors. For example, say you have these two vectors:

Their dot product is shown here:

In p5.js, this translates to the following:

let a = createVector(-3, 5);

let b = createVector(10, 1);

let n = a.dot(b);

The p5.Vector class includes a function to calculate the dot product.

If you look in the guts of the p5.Vector source code, you’ll find a pretty simple implementation of this dot() method:

dot(v) {

return this.x * v.x + this.y * v.y + this.z * v.z;

For 2D vectors, z is 0.

}

This formula is simple enough, but why is the dot product necessary, and when is it useful in coding? Well, one of the more common uses of the dot product is to find the angle between two vectors. In fact, the dot product can also be expressed as shown here:

In other words, the dot product of and is equal to the magnitude of times the magnitude of times the cosine of theta (with theta being the angle between the two vectors and ).

The two dot-product formulas can be derived from each other with trigonometry, but I’m happy not to follow that path and instead just operate on the following assumption:

This works since both sides of the equation equal . What does that assumption do for me? Say I have two vectors and :

In this scenario, I know the components of the vectors but don’t know the angle between them (see Figure 5.18). Using the dot-product formula, I can solve for the cosine of :

To solve for , I can take the inverse cosine, or arccosine (acos in p5.js), of the right side of the equation:

I’ll do the math now with actual numbers:

Here’s the p5.js version:

let a = createVector(10, 2);

let b = createVector(4, -3);

let angle = acos(a.dot(b) / (a.mag() * b.mag()));

Turns out, if you again dig into the guts of the p5.js source code, you’ll find a method called angleBetween that implements this exact algorithm.

angleBetween(v) {

let dot = this.dot(v);

let angle = Math.acos(dot / (this.mag() * v.mag()));

return angle;

}

Sure, I could have told you about this angleBetween() method to begin with, but understanding the dot product in detail will better prepare you for the upcoming path-following examples and help you see how the dot product fits into a concept called scalar projection.

Exercise 5.9

Create a sketch that shows the angle between two vectors.

There are a couple of things to note about dot products:

- If two vectors ( and ) are orthogonal (that is, perpendicular), their dot product () is equal to 0.

- If two vectors are unit vectors, their dot product is equal to the cosine of the angle between them. In other words, if and are of length 1.

Now that I’ve covered the fundamentals of the dot product, I can return to Reynolds’s path-following algorithm.

Simple Path Following

Figure 5.19 depicts all the ingredients of the path-following behavior. A lot of components are at play here beyond just a vehicle and target, so take some time to review the full diagram. I’ll then slowly unpack the algorithm piece by piece.

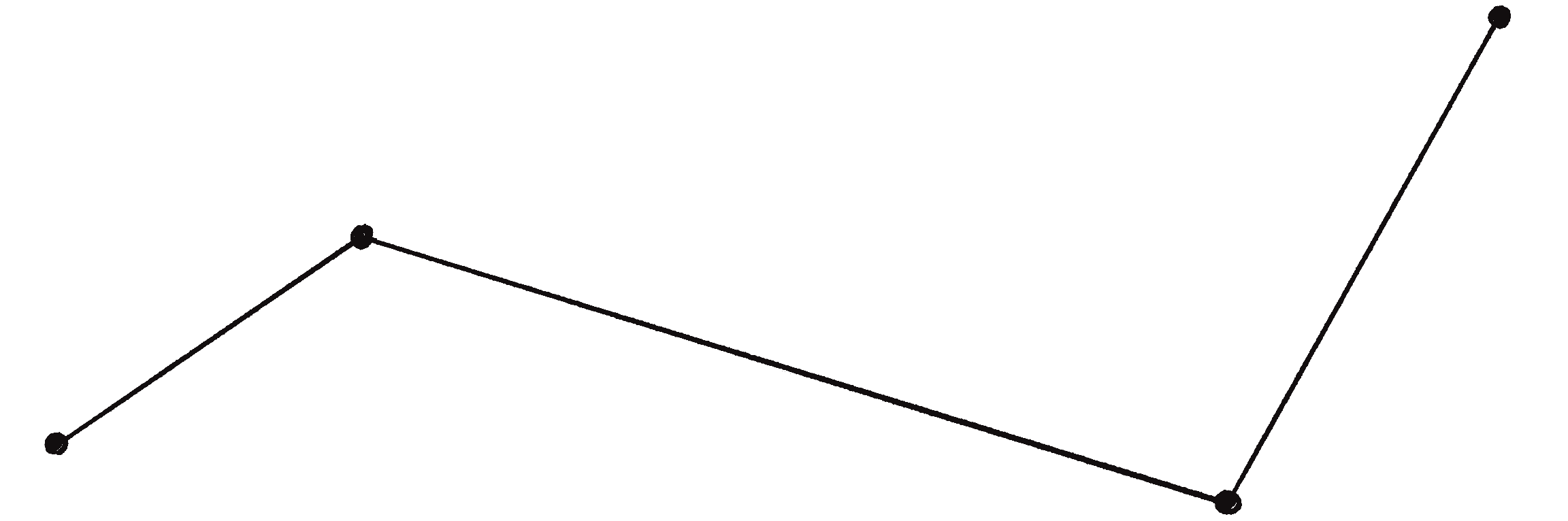

First, what do I mean by a path? Many techniques can be used to implement a path, but one simple way is to define a path as a series of connected points, as in Figure 5.20.

The simplest version of this path would be a line between two points (Figure 5.21).

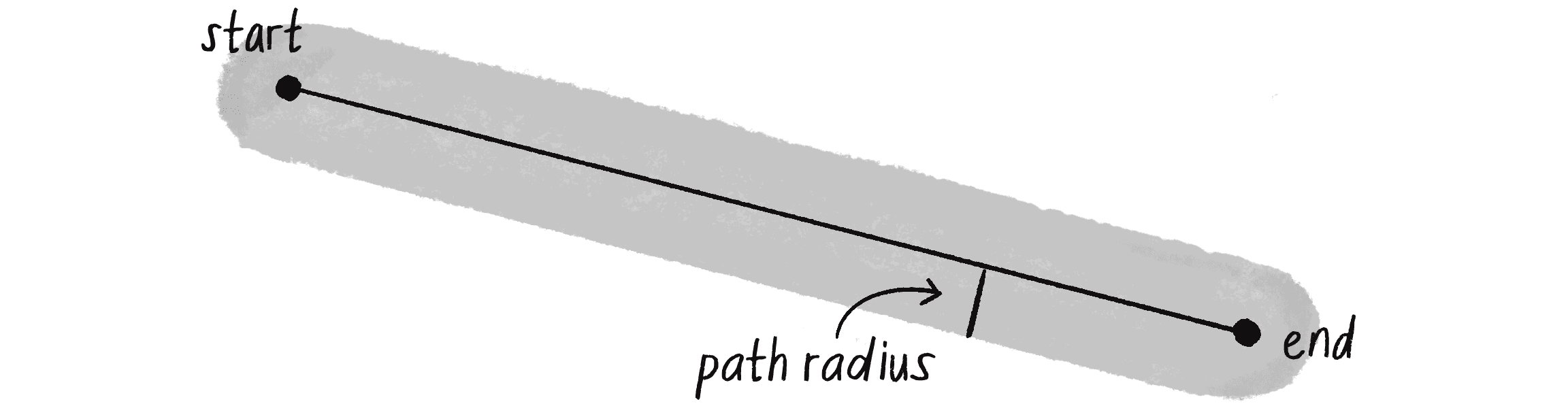

I’m also going to consider a path to have a radius. If the path is a road, the radius is the road’s width. With a smaller radius, vehicles have to follow the path more closely; a wider radius allows them to stray a bit more to either side of the path.

Now I’ll put this into a class.

Example 5.5: Creating a Path Object

class Path {

constructor() {

this.radius = 20;

A path has a radius, indicating its width.

this.start = createVector(0, height / 3);

this.end = createVector(width, (2 * height) / 3);

A path has only two points, start and end.

}

show() {

Display the path.

strokeWeight(this.radius * 2);

stroke(0, 100);

line(this.start.x, this.start.y, this.end.x, this.end.y);

strokeWeight(1);

stroke(0);

line(this.start.x, this.start.y, this.end.x, this.end.y);

}

}

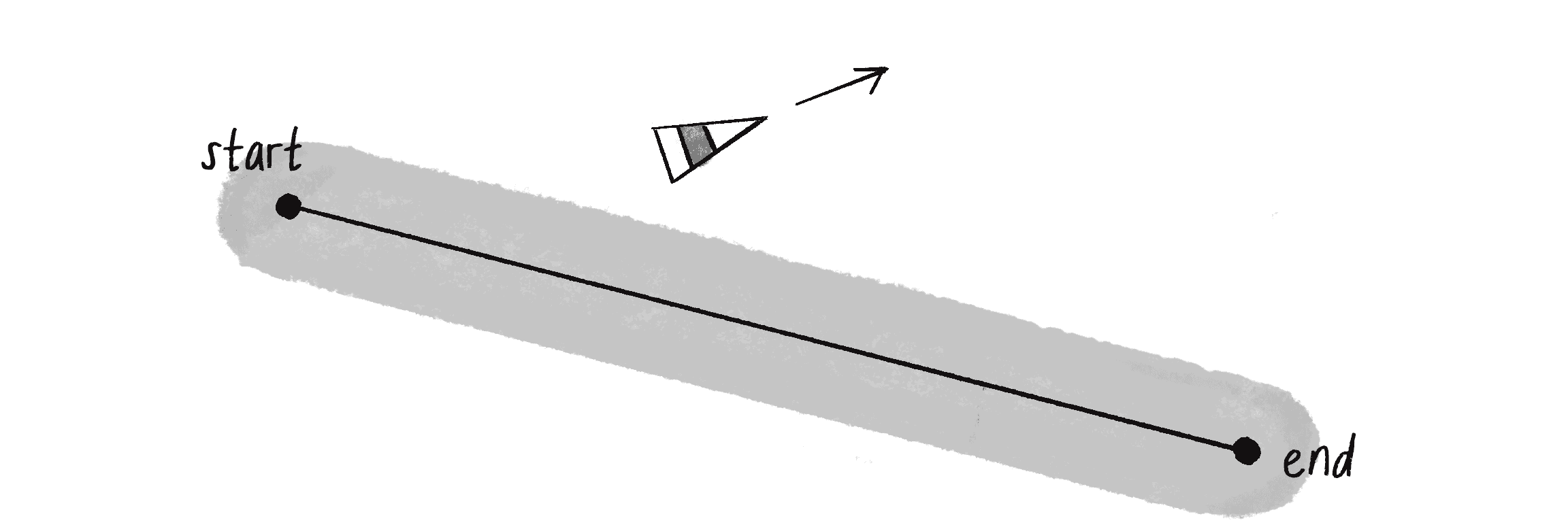

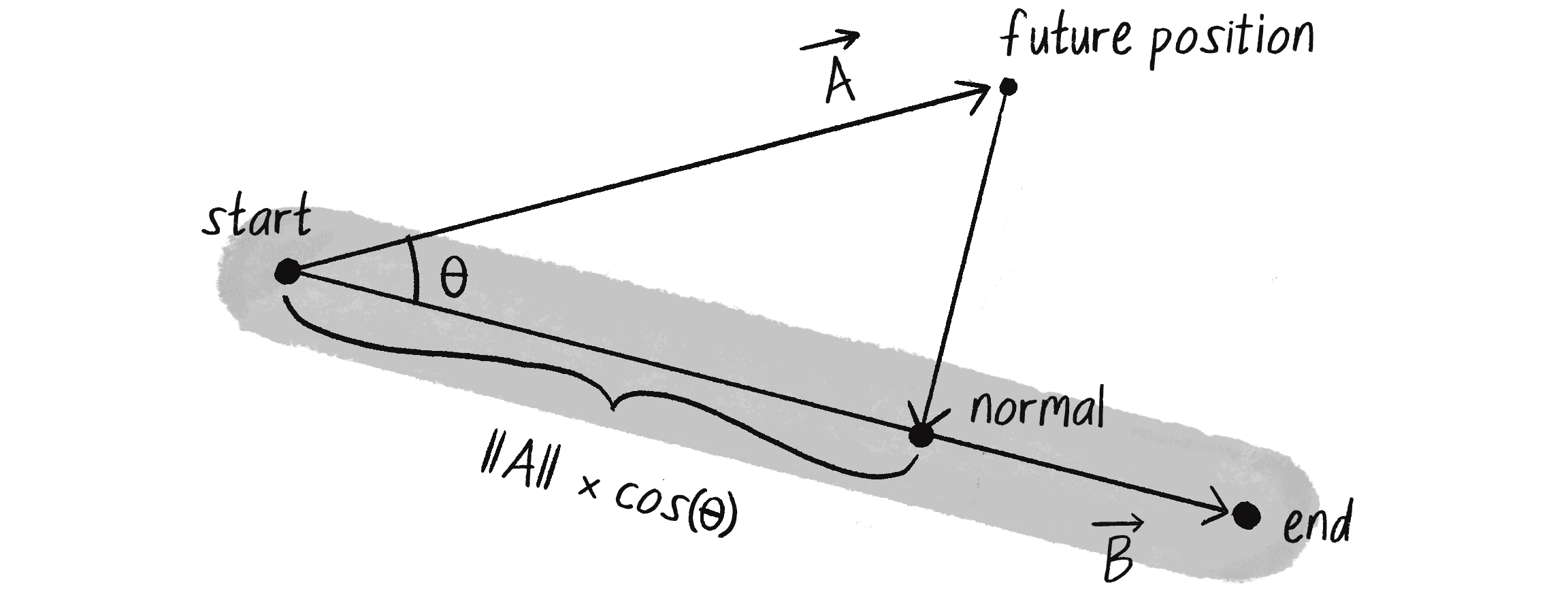

Now, assume that a vehicle is outside the path’s radius, moving with a velocity, as in Figure 5.22.

The first step is to predict (assuming a constant velocity) where that vehicle will be in the future:

let future = vel.copy();

Start by making a copy of the velocity.

future.setMag(25);

Look 25 pixels ahead by setting the magnitude.

future.add(this.position);

Add the vector to the position to find the future position.

Once I have that position, it’s time to determine the distance from that predicted position to the path. If it’s very far away, the vehicle has strayed from the path and needs to steer back toward it. If the vehicle is on the path, all is well and the vehicle can continue on its way.

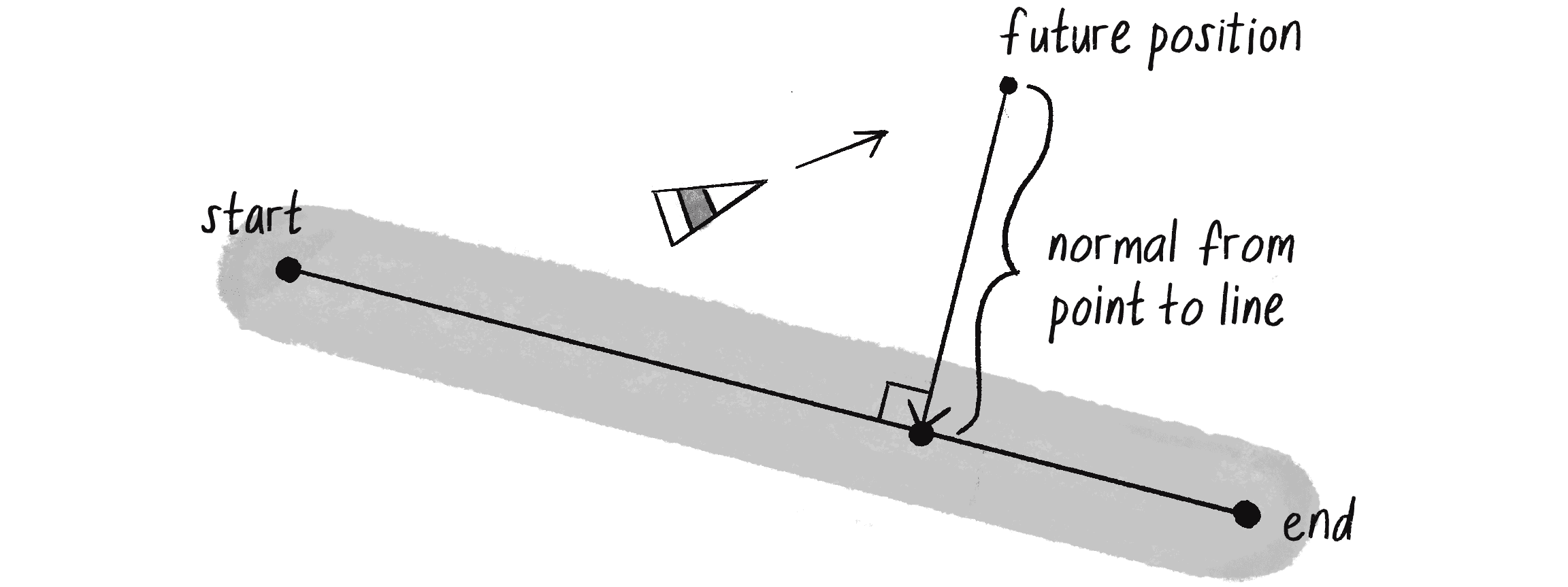

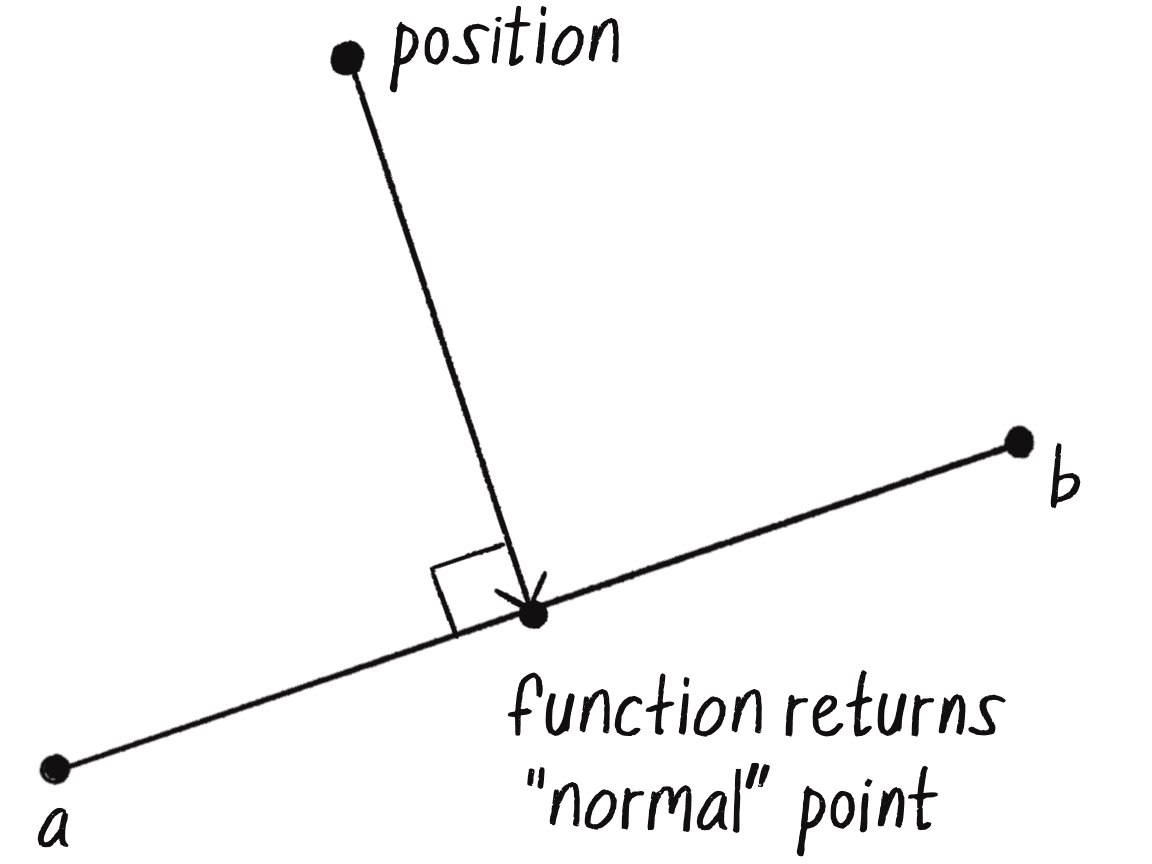

Essentially, I need to calculate the distance between a point (the future position) and a line (the path). That distance is defined as the length of the normal, a vector that extends from the point to the line and is perpendicular to the line (Figure 5.23).

How do I find the normal? First, I can define a vector (call it ) that extends from the path’s starting point to the vehicle’s future position:

let a = p5.Vector.sub(future, path.start);

Next, I can define a vector (call it ) that points from the start of the path to the end:

let b = p5.Vector.sub(path.end, path.start);

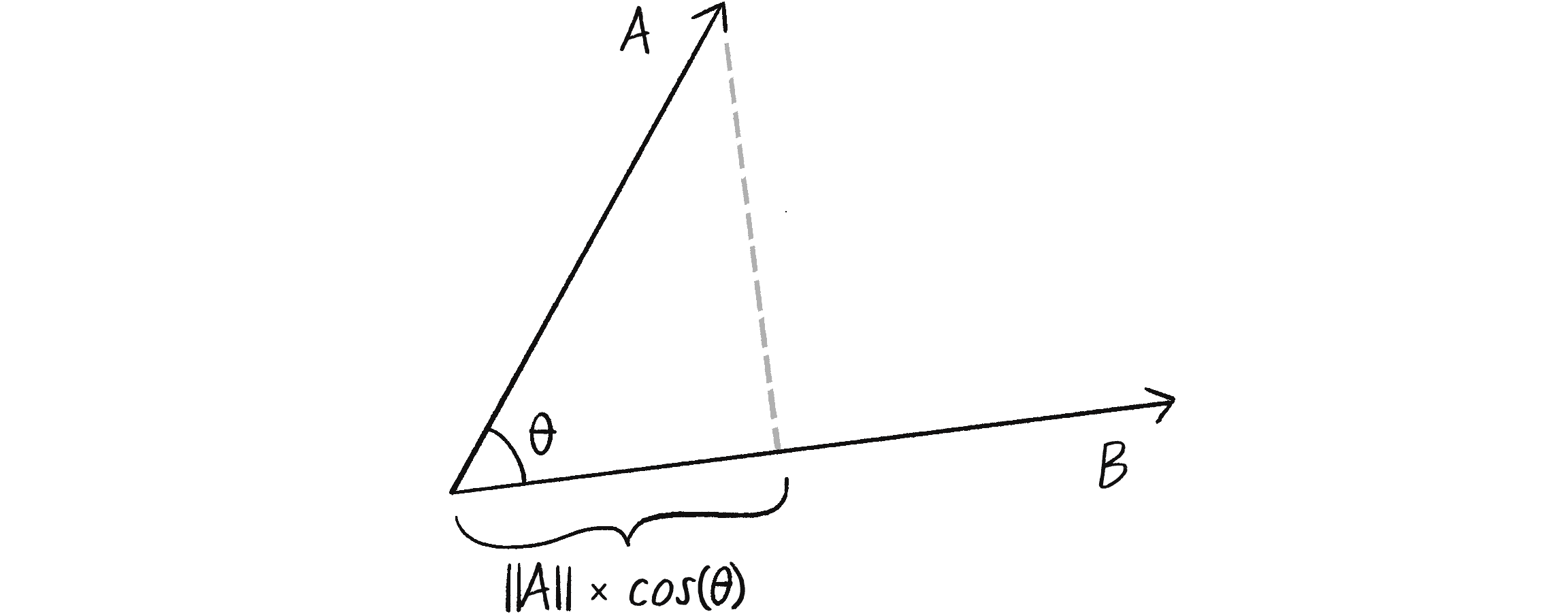

Now, with a little trigonometry (the cah in sohcahtoa), I can calculate the distance from the path’s start to the normal point. As shown in Figure 5.24, it’s .

If I only knew , I could find that normal point with the following code:

let d = a.mag() * cos(theta);

Get the distance from the start to the normal.

b.setMag(d);

Scale vector b to that distance.

let normalPoint = p5.Vector.add(path.start, b);

The normal point can be found by adding the scaled version of b to the path’s starting point.

Luckily, if the dot product has taught me anything, it’s that given two vectors, I can calculate the angle between those vectors!

let theta = p5.Vector.angleBetween(a, b);

What is theta? The angle between A and B!

let d = a.mag() * cos(theta);

b.setMag(d);

let normalPoint = p5.Vector.add(path.start, b);

While this code will work, I can make one more simplification. Looking again, you’ll see that the magnitude for vector is set to a.mag() * cos(theta), which is the code translation of the following:

And, recall this:

Now, what if is a unit vector of length 1? Then you have this:

Or, more simply:

When is a unit vector, is the same as the dot product of and . Turning b into a unit vector is as simple as calling normalize(). I can therefore bypass calculating theta with angleBetween() and simplify the code as follows:

let theta = p5.Vector.angleBetween(a, b);

let d = a.mag() * cos(theta);

b.setMag(d);

b.normalize();

b.setMag(a.dot(b));

Normalize b and use the dot product to set b’s length.

let normalPoint = p5.Vector.add(path.start, b);

This process of scaling according to the normal point is commonly known as scalar projection. We say that is the scalar projection of onto , as in Figure 5.25.

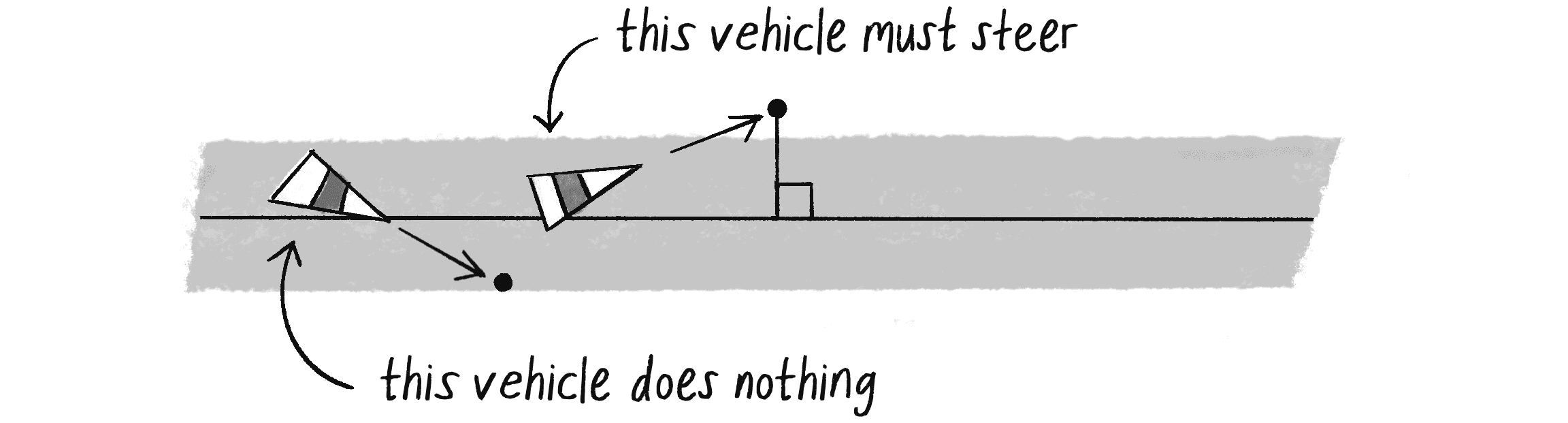

Once I have the normal point along the path, the next step is to decide whether and how the vehicle should steer toward the path. Reynolds’s algorithm states that the vehicle should steer toward the path only if it’s in danger of straying beyond the path—that is, if the distance between the normal point and the predicted future position is greater than the path’s radius. This is illustrated in Figure 5.26.

I can encode that logic with a simple if statement and use my earlier seek() method to steer the vehicle when necessary.

let distance = p5.Vector.dist(future, normalPoint);

if (distance > path.radius) {

If the vehicle is outside the path, seek the target.

this.seek(target);

The desired velocity and steering force can use the seek() method created in Example 5.1.

}

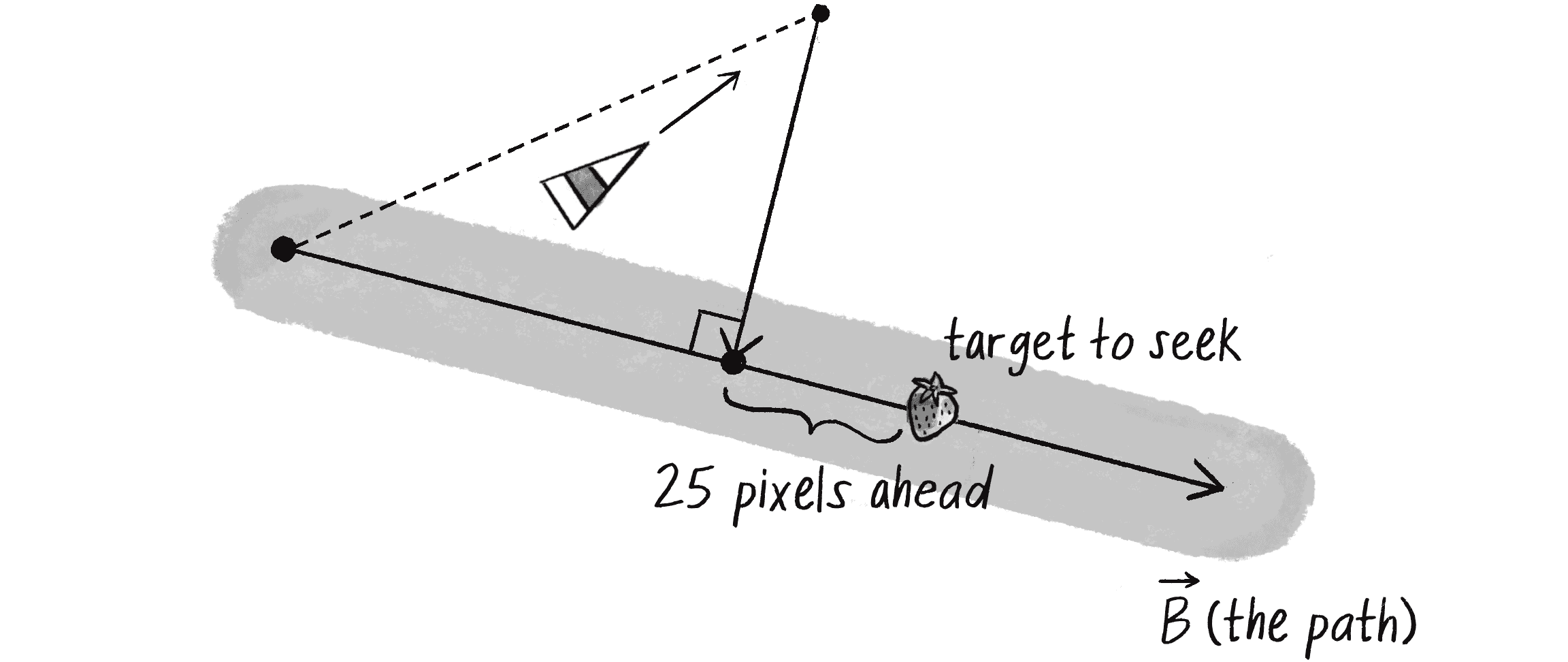

But what’s the target that the path follower is seeking? Reynolds’s algorithm involves picking a point ahead of the normal on the path. Since I know the vector that defines the path (), I can implement this point ahead by adding a vector that points in ’s direction to the vector representing the normal point, as in Figure 5.27.

I’ll arbitrarily say the target should be 25 pixels ahead of the normal:

let distance = p5.Vector.dist(future, normalPoint);

if (distance > path.radius) {

b.setMag(25);

Set the magnitude to 25 pixels (picked arbitrarily).

let target = p5.Vector.add(normalPoint, b);

Add b to normalPoint to find the target 25 pixels ahead on the path.

this.seek(target);

Seek the target.

}

Putting it all together, here’s the path-following method in the Vehicle class.

Example 5.6: Simple Path Following

follow(path) {

let future = this.velocity.copy();

future.setMag(25);

future.add(this.position);

Step 1: Predict the vehicle’s future position.

let normalPoint = getNormalPoint(future, path.start, path.end);

Step 2: Find the normal point along the path.

let b = p5.Vector.sub(path.end, path.start);

b.setMag(25);

let target = p5.Vector.add(normalPoint, b);

Step 3: Look a little farther along the path and set a target. (To optimize, this could be moved into the if statement to find the target only if it’s off the path.)

let distance = p5.Vector.dist(normalPoint, future);

if (distance > path.radius) {

this.seek(target);

Step 4: If you’re off the path, seek that target in order to get on the path.

}

}

getNormalPoint() function: position, a, and bNotice that instead of using all that dot-product and scalar projection code to find the normal point, I call the getNormalPoint() function. In cases like this, it’s useful to break out the code that performs a specific task (finding a normal point) into a function that can be called when required. The function takes three vector arguments (see Figure 5.28): the first defines a point p in Cartesian space (the vehicle’s future position), and the second and third define a line segment between two points a and b (the path).

getNormalPoint(position, a, b) {

let vectorA = p5.Vector.sub(position, a);

Vector that points from a to position

let vectorB = p5.Vector.sub(b, a);

Vector that points from a to b

vectorB.normalize();

vectorB.mult(vectorA.dot(vectorB));

Use the dot product for scalar projection.

let normalPoint = p5.Vector.add(a, vectorB);

Find the normal point along the line segment.

return normalPoint;

}

What do I have so far? I have a Path class that defines a path as a line between two points. I have a Vehicle class with a method to follow the path (using steering to seek a target along the path). In all, this makes for a decent example, and yet it’s pretty darn limiting. What’s missing?

Take a deep breath. You’re almost there.

Path Following with Multiple Segments

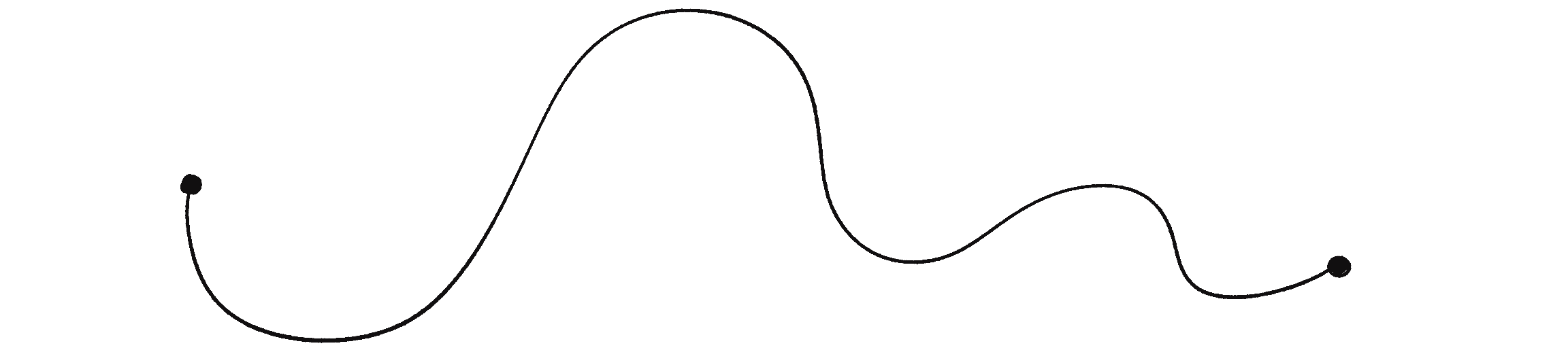

What if I want a vehicle to follow a more complex path than just a single straight line? Perhaps a curved path that moves in a variety of directions, as in Figure 5.29?

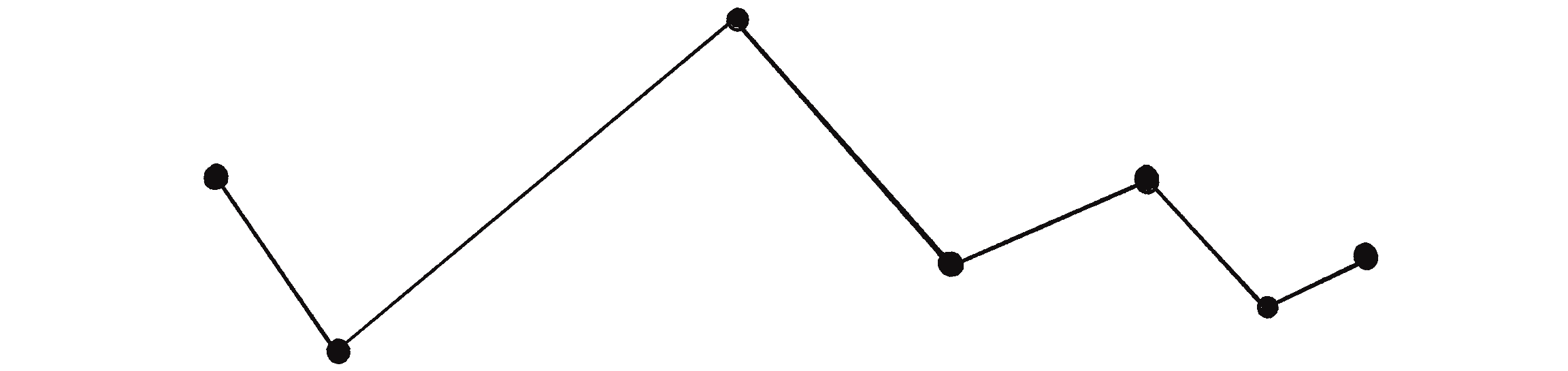

Maybe I’m being a little too ambitious. I could investigate algorithms for following a curved path, but I’m much less likely to end up needing a cool compress on my forehead if I stick with straight line segments, like those in Figure 5.30. I could always still draw the path as a curve, but it’s best to approximate it behind the scenes with simplified geometric forms for the necessary calculations.

If I made path following work with one line segment, how do I make it work with a series of connected line segments? The key is in the way I find the target point along the path.

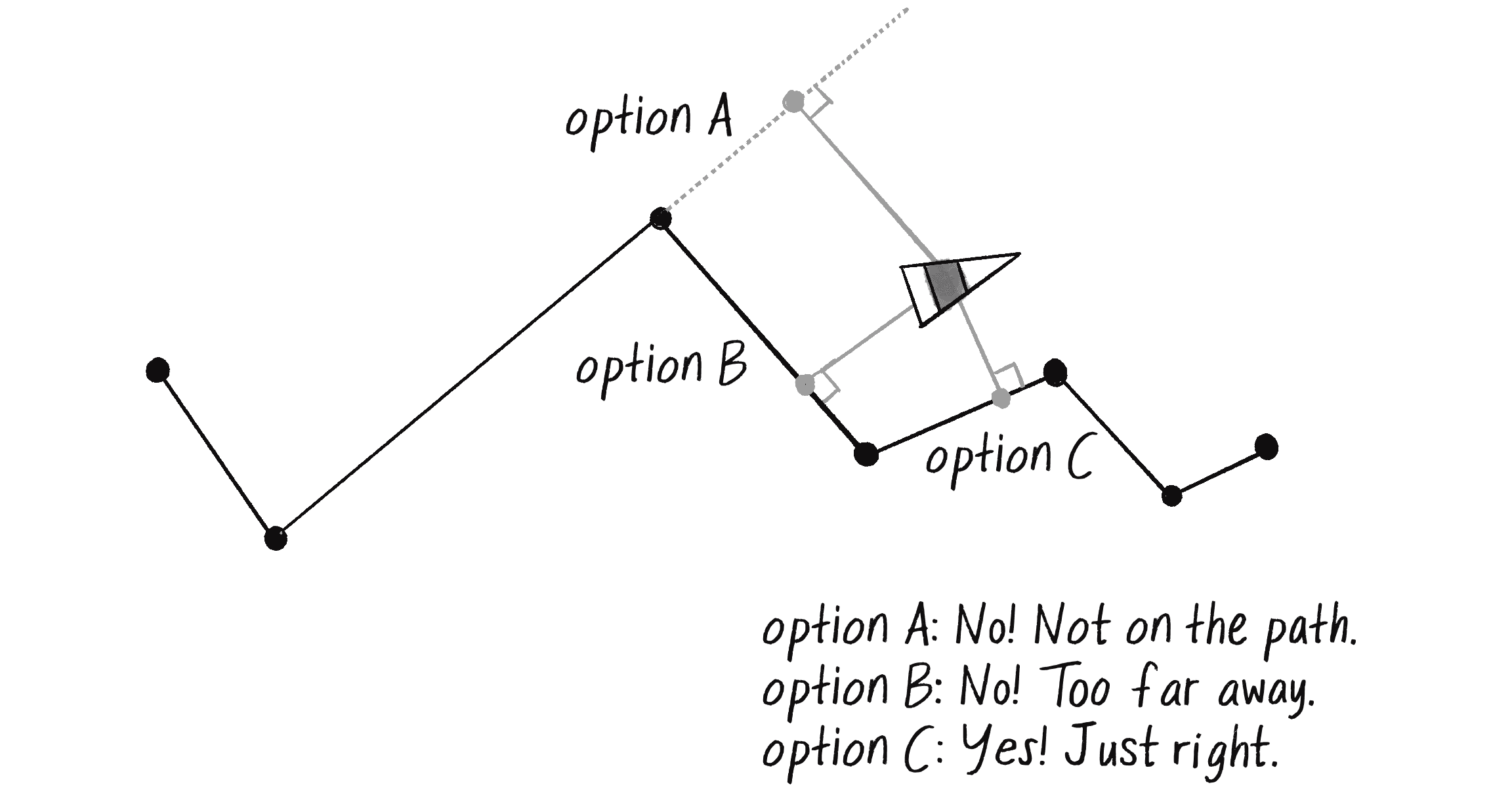

To find the target with just one line segment, I had to compute the normal to that line segment. Now that I have a series of line segments, I also have a series of normal points to be computed—one for each segment (see Figure 5.31). Which one does the vehicle choose? The solution Reynolds proposed is to pick the normal point that is (a) closest and (b) on the path.

If you have a point and an infinitely long line, you’ll always have a normal point that touches the line. But if you have a point and a finite line segment, you won’t necessarily find a normal that’s on the line segment. If this happens for any of the segments, I can disqualify those normals. Once I’m left with just those normals that are on the path (only two in Figure 5.31), I pick the one that’s shortest.

To write the code for this, I’ll expand the Path class to have an array of points (rather than just the start and end).

Example 5.7: Path Made of Multiple Line Segments

class Path {

constructor() {

this.radius = 20;

this.points = [];

A path is now an array of points (p5.Vector objects).

}

addPoint(x, y) {

let pathPoint = createVector(x, y);

this.points.push(pathPoint);

}

This method allows you to add points to the path.

show() {

stroke(200);

strokeWeight(this.radius * 2);

noFill();

beginShape();

for (let pathPoint of this.points) {

vertex(pathPoint.x, pathPoint.y);

}

endShape();

Draw a thicker gray line for the path radius.

stroke(0);

strokeWeight(1);

beginShape();

for (let pathPoint of this.points) {

vertex(pathPoint.x, pathPoint.y);

}

endShape();

Draw a thin line for the path center.

}

}

Now that the Path class has been updated, it’s the vehicle’s turn to learn how to accommodate multiple line segments. All it did before was find the normal for one line. Using a loop, it can find the normals for all the segments:

for (let i = 0; i < path.points.length - 1; i++) {

let a = path.points[i];

let b = path.points[i + 1];

let normalPoint = getNormalPoint(future, a, b);

Find the normal for each line segment.

The next step is to test whether the normal point is actually between points a and b. Since I know the path goes from left to right in this example, I can test whether the x component of normalPoint is outside the x components of a and b.

if (normalPoint.x < a.x || normalPoint.x > b.x) {

normalPoint = b.copy();

Use the end point of the segment as your normal point if you can’t find one.

}

If the normal point is not within the line segment, I’ll just pretend the end point of that line segment is the normal. (You might also try the beginning point, depending on the particulars of your path.) This will ensure that the vehicle always stays on the path, even if it strays beyond the bounds of the line segments.

Exercise 5.10

A more general-purpose way to test whether the normal point lies on the segment is to sum the distances between normalPoint and a and b. If the result is greater than the length of the line segment, the normal is outside the segment. Can you write this algorithm with p5.js?

Finally, I need to find the closest normal point to the vehicle. To accomplish this, I can start with a very high “world record” distance and iterate through each normal point to see if it beats (is less than) the record. Each time a normal point beats the record, the world record is updated, and the winning point is stored in a variable named target. At the end of the loop, target will hold the closest normal point.

Example 5.8: Path Following

let target = null;

let worldRecord = Infinity;

Start with a very high record that can easily be beaten, like infinity!

for (let i = 0; i < path.points.length - 1; i++) {

let a = path.points[i];

let b = path.points[i + 1];

let normalPoint = getNormalPoint(future, a, b);

if (normalPoint.x < a.x || normalPoint.x > b.x) {

normalPoint = b.copy();

}

let distance = p5.Vector.dist(future, normalPoint);

if (distance < worldRecord) {

worldRecord = distance;

target = normalPoint.copy();

}

If you beat the record, this should be your target.

let dir = p5.Vector.sub(b, a);

dir.setMag(25);

target.add(dir);

Look at the direction of the line segment in order to seek a little bit ahead of the normal.

}

You may have noticed the use of Infinity to initialize worldRecord. In JavaScript, Infinity is a special numeric value that represents, well, infinity. It works in this case because I need a starting value that will always be higher than any plausible distance calculated in the code. The first calculated distance will always set a new world record, against which all the others will be compared.

I also want to highlight the hardcoded value of 25, which sets the distance ahead on the path from the normal for the target. Reynolds indicates that this value should be dynamic and calculated based on the vehicle’s distance to the path and its speed. Give this a try and see how it improves the accuracy or responsiveness of the path-following behavior!

Exercise 5.11

Create a path that changes over time. Can the points that define the path have their own steering behaviors?

Complex Systems

I said the purpose of this chapter is to breathe life into the things that move around p5.js canvases. You’ve come a long way by learning to write the code for an autonomous agent and playing with examples of that agent’s individual behaviors. But this is no place to stop. Yes, a vehicle is a simulated being that makes decisions about how to seek and flow and follow. But what is a life led alone, without the love and support of others?

And so, as a logical next step, I’ll take the work I’ve done developing behaviors for individual autonomous agents and apply it to simulations that involve many autonomous agents operating in parallel—agents that have an ability to perceive not only their physical environment but also the actions of their fellow agents, and then act accordingly. In other words, I want to create complex systems with p5.js.

A complex system is typically defined as a system that’s more than the sum of its parts. While the individual elements of the system may be incredibly simple and easily understood, the behavior of the system as a whole can be highly complex, intelligent, and difficult to predict.

Think, for example, about a tiny, crawling ant—one single ant. An ant is an autonomous agent; it can perceive its environment (using antennae to gather information about the direction and strength of chemical signals) and make decisions about how to move based on those signals. But can a single ant acting alone build a nest, gather food, or defend its queen? An ant is a simple unit that can perceive only its immediate environment. A colony of ants, however, is a sophisticated, complex system, a superorganism of components that work together to accomplish difficult, complicated goals.

Here are three key principles that will guide my work with complex systems:

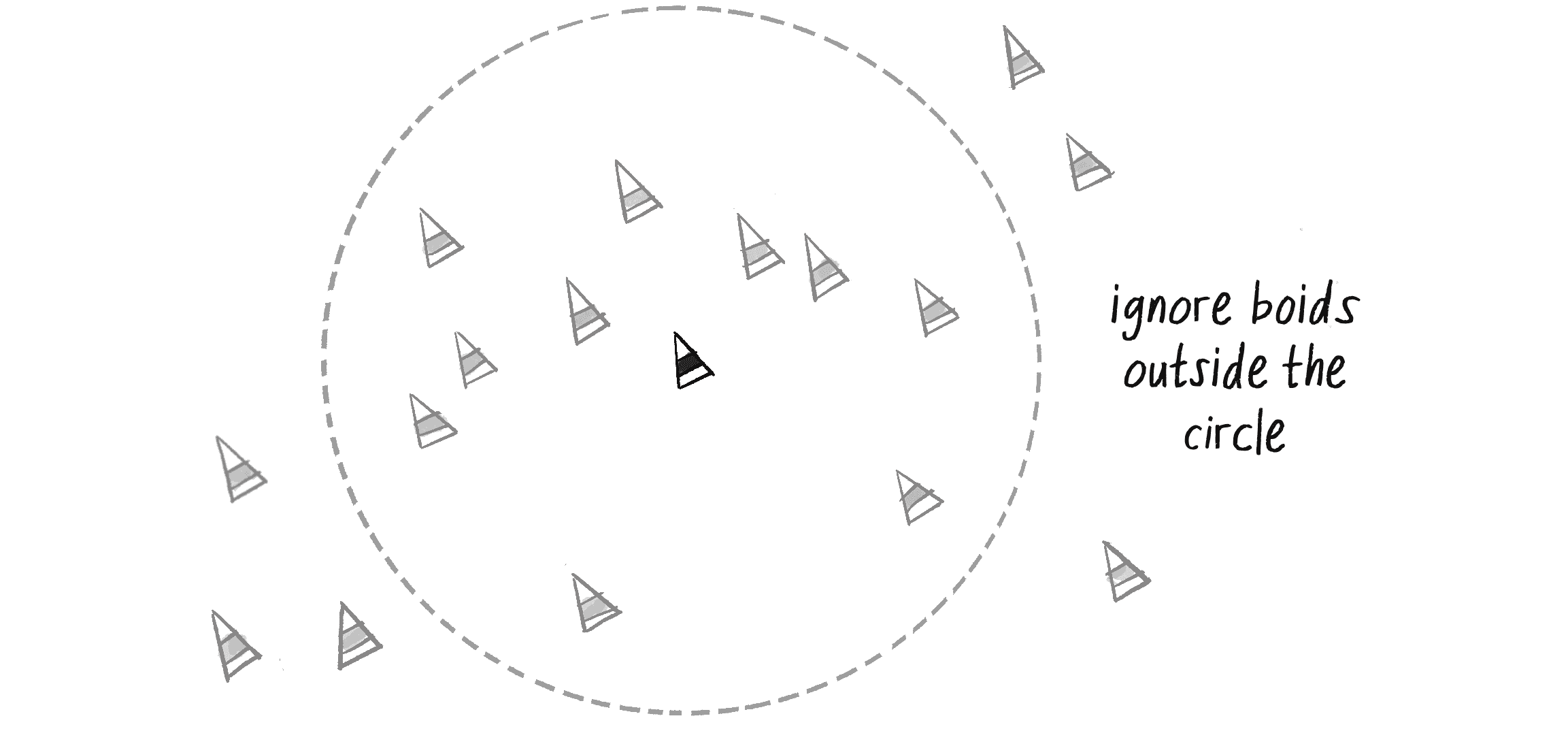

- Simple units have short-range relationships. This is what I’ve been building all along: vehicles that have a limited perception of their environment.

- Simple units operate in parallel. For every cycle through the

draw()loop, each unit will calculate its own steering forces. This will create the appearance of all the units working in parallel. - Systems as a whole exhibit emergent phenomena. Complex behaviors, patterns, and intelligence can emerge from the interactions among simple units. This phenomenon occurs in nature, such as in ant colonies, migration patterns, earthquakes, and snowflakes. The question is whether the same results can be achieved in a p5.js sketch.

Beyond these core principles, three additional qualities of complex systems will help frame the discussion, as well as provide guidelines for features to include in a software simulation. It’s important to acknowledge that this is a fuzzy set of characteristics, and not all complex systems have all

of them:

- Nonlinearity: This aspect of complex systems is often casually referred to as the butterfly effect, coined by mathematician and meteorologist Edward Norton Lorenz, a pioneer in the study of chaos theory. In 1961, Lorenz was running a computer weather simulation for the second time and, perhaps to save a little time, typed in a starting value of 0.506 instead of 0.506127. The end result was completely different from the first result of the simulation. Stated more evocatively, the theory is that a single butterfly flapping its wings on the other side of the world could cause a massive weather shift and ruin your weekend at the beach. It’s called nonlinear because there isn’t a linear relationship between a change in initial conditions and a change in outcome. A small change in initial conditions can have a massive effect on the outcome. Nonlinear systems are a superset of chaotic systems. In Chapter 7, you’ll see how even in a system of many 0s and 1s, if you change just one bit, the result will be completely different.

- Competition and cooperation: One ingredient that often makes a complex system tick is the presence of both competition and cooperation among the elements. The upcoming flocking system will have three rules: alignment, cohesion, and separation. Alignment and cohesion will ask the elements to “cooperate” by trying to stay together and move together. Separation, however, will ask the elements to “compete” for space. When the time comes, try taking out just the cooperation or just the competition, and you’ll see how the system loses its complexity. Competition and cooperation are found together in living complex systems, but not in nonliving complex systems like the weather.

- Feedback: Complex systems often include a loop that feeds the output of the system back into the system to influence its behavior in a positive or negative direction. Let’s say you decide to take public transportation to work each day because it’s the most reliable and cost-effective solution, and you’re put off by the traffic congestion and environmental impact of driving. You aren’t alone; others turn to public transportation too. The system grows more efficient and attractive, serving more people with the same resources, and meanwhile, vehicle traffic is reduced. Over time, however, the system may struggle to accommodate the rising demand, leading to overcrowding, delays, and increased fares to fund infrastructure improvements. As a result, you and others start to switch back to driving, thereby increasing traffic congestion once again and reducing public transport’s efficiency. As traffic worsens, the funds from increased fares are (hopefully) used to improve public transport infrastructure, making it more appealing once again. In this way, the cost and efficiency of public transportation are both the input of the system (determining whether you choose to use it or not) and the output (the degree of traffic congestion and subsequent cost and efficiency). Economic models are just one example of a human complex system. Others include fads and trends, elections, crowds, and traffic flow.

Complexity will serve as a key theme for much of the remainder of the book. In this section, I’ll begin by introducing an additional feature to the Vehicle class: the ability to perceive neighboring vehicles. This enhancement will pave the way for a culminating example of a complex system in which the interplay of simple individual behaviors results in an emergent behavior: flocking.

Implementing Group Behaviors (or: Let’s Not Run Into Each Other)

Managing a group of objects is certainly not a new concept. You’ve seen this before—in Chapter 4, where I developed the Emitter class to represent an overall particle system. There, I used an array to store a list of individual particles. I’ll start with the same technique here and store Vehicle objects in an array:

let vehicles;

Declare an array of Vehicle objects.

function setup() {

vehicles = [];

for (let i = 0; i < 100; i++) {

vehicles.push(new Vehicle(random(width), random(height)));

Initialize and fill the array with a bunch of vehicles.

}

}

Now, when it comes time to manipulate all the vehicles in draw(), I can loop through the array and call the necessary methods:

function draw() {

for (let vehicle of vehicles) {

vehicle.update();

vehicle.show();

}

}

Maybe I want to add a behavior, a force to be applied to all the vehicles. This could be seeking the mouse:

vehicle.seek(mouseX, mouseY);

But that’s an individual behavior, and I’ve already spent the bulk of this chapter worrying about individual behaviors. You’re here because you want to apply a group behavior. I’ll begin with separation, a behavior that commands, “Avoid colliding with your neighbors!”

vehicle.separate();

That looks good but is not quite right. What’s missing? In the case of seek(), I said, “Seek mouseX and mouseY.” In the case of separate(), I’m saying, “Separate from everyone else.” Who is everyone else? It’s the list of all the other vehicles:

vehicle.separate(vehicles);

This is the big leap beyond what you saw before with particle systems. Instead of each element (particle or vehicle) operating on its own, I’m now saying, “Hey you, that vehicle there! When it comes time for you to operate, you need to operate with an awareness of everyone else. So I’m going to go ahead and pass you the list of everyone else.”

Putting together what I’ve done so far, here are the setup() and draw() functions for a sketch that exhibits group behavior:

let vehicles;

function setup() {

createCanvas(640, 240);

vehicles = [];

for (let i = 0; i < 100; i++) {

vehicles.push(new Vehicle(random(width), random(height)));

}

}

function draw() {

background(255);

for (let vehicle of vehicles) {

vehicle.separate(vehicles);

This is really the only new thing you’re doing in this section. You’re asking a Vehicle object to examine all the other vehicles in the process of calculating a separation force.

vehicle.update();

vehicle.show();

}

}

Of course, this is just the beginning. The real work happens inside the separate() method. Reynolds defines the separation behavior as “steer to avoid crowding.” In other words, if a given vehicle is too close to you, steer away from that vehicle. Sound familiar? Remember the seek behavior, steering a vehicle toward a target? Reverse that force and you have the flee behavior, which is what should be applied here to achieve separation (see Figure 5.32).

But what if more than one vehicle is too close? In that case, I’ll define separation as the average of all the vectors pointing away from any close vehicles (Figure 5.33).

How do I turn that into code? Remember, I’m writing a method called separate() that receives an array of Vehicle objects as an argument:

separate(vehicles) {

}

Inside this method, I’ll loop through all the vehicles and see if any are too close:

let desiredSeparation = 20;

for (let other of vehicles) {

This variable specifies how close is too close.

let d = p5.Vector.dist(this.position, other.position);

What is the distance between this vehicle and the other vehicle?

if (this !== other && d < desiredSeparation) {

Any code here will be executed if the vehicle is within 20 pixels.

}

}

Notice that I’m checking not only whether the distance is less than a desired separation but also whether this is not equal to other. This is a key element. Remember, all the vehicles are in the array; without this extra check, the vehicle will attempt to flee from itself!

If the vehicles are too close, I compute a vector that points away from the offending vehicle:

if (this !== other && d < desiredseparation) {

let diff = p5.Vector.sub(this.position, other.position);

diff.normalize();

A vector pointing away from the other’s position

}

This isn’t enough. I have a fleeing vector now, but what I really need is the average of the fleeing vectors for all the vehicles that are too close. How do I compute an average? Add up all the vectors and divide by the total:

let sum = createVector();

Start with an empty vector.

let count = 0;

We have to keep track of how many vehicles are too close.

for (let other of vehicles) {

let d = p5.Vector.dist(this.position, other.position);

if (this !== other && d < desiredseparation) {

let diff = p5.Vector.sub(this.position, other.position);

diff.normalize();

sum.add(diff);

count++;

Add all the vectors together and increment the count.

}

}

if (count > 0) {

Make sure that there is at least one close vehicle. You don’t want to bother doing anything if nothing is too close (not to mention, you can’t divide by zero!).

sum.div(count);

}

Once I have the average vector (stored in the variable sum), that vector can be scaled to the maximum speed and become the desired velocity—the vehicle desires to move in that direction at maximum speed! (In fact, I really don’t have to divide by count anymore since the magnitude is set manually.) And once I have the desired velocity, it’s the same old Reynolds story—steering equals desired minus velocity:

if (count > 0) {

sum.setMag(this.maxspeed);

Scale average to max speed (this becomes desired).

let steer = p5.Vector.sub(sum, vel);

Reynolds’s steering formula

steer.limit(this.maxforce);

this.applyForce(steer);

Apply the force to the vehicle.

}

Here’s the method in its entirety.

Example 5.9: Separation

separate(vehicles) {

let desiredSeparation = this.r * 2;

The desired separation is based on the vehicle’s size.

let sum = createVector();

let count = 0;

for (let other of vehicles) {

let d = p5.Vector.dist(this.position, other.position);

if (this !== other && d < desiredSeparation) {

let diff = p5.Vector.sub(this.position, other.position);

diff.setMag(1 / d);

What is the magnitude of the p5.Vector pointing away from the other vehicle? The closer it is, the more the vehicle should flee. The farther, the less. So the magnitude is set to be inversely proportional to the distance.

sum.add(diff);

count++;

}

}

if (count > 0) {

sum.setMag(this.maxspeed);

let steer = p5.Vector.sub(sum, this.velocity);

steer.limit(this.maxforce);

this.applyForce(steer);

}

}

The separate() method includes two extra improvements. First, the desired separation now depends on the size of the vehicle, as opposed to an arbitrary constant. This way, the separation behavior adapts dynamically to the individual characteristics of the vehicles. Second, the magnitude of the vector pointing away from a neighboring vehicle is set to be inversely proportional to the distance. This means that the closer the neighbor, the more the vehicle wants to flee, and vice versa.

Exercise 5.12

Create a cohere() method that follows the opposite logic of separate(): if a vehicle is beyond a certain distance, steer toward that vehicle. This will keep the group together. (In a moment, I’ll look at what happens when both cohesion and separation play out together in the same simulation.)

Exercise 5.13

Add the separation force to path following to create a simulation of Reynolds’s group path following.

Combining Behaviors

The most exciting and intriguing group behaviors come from mixing and matching multiple steering forces. After all, how could I even begin to simulate emergence in a complex system through a sketch that has only one rule?

When multiple steering forces are at play, I need a mechanism for managing them all. You may be thinking, “This is nothing new. We juggle multiple forces all the time.” You would be right. In fact, this technique appeared as early as Chapter 2:

let wind = createVector(0.001, 0);

let gravity = createVector(0, 0.1);

mover.applyForce(wind);

mover.applyForce(gravity);

Here, a Mover object responds to two forces. This all works nicely because of the way the Mover class was designed to accumulate the force vectors into its acceleration vector. In this chapter, however, the forces stem from the internal desires of the movers (now called vehicles). And those desires can be weighted so that some hold more sway than others. For example, consider a sketch in which all vehicles have two desires:

- Seek the mouse position.

- Separate from any vehicles that are too close.

Imagine the vehicles represent a school of fish. Although the fish want to avoid colliding with one another, their primary concern is seeking out a food source (the mouse). Being able to adjust the weights of the two steering forces is crucial to achieving this effect.

To begin, I’ll add a method called applyBehaviors() to the Vehicle class to manage all the behaviors:

applyBehaviors(vehicles) {

this.separate(vehicles);

this.seek(createVector(mouseX, mouseY));

}

Here, a single method takes care of calling the other methods that apply the forces—separate() and seek(). I could start mucking around within those methods to adjust the strength of the forces they’re calculating, but it might be easier to instead ask those methods to simply calculate and return the forces. Then I can adjust the forces’ strength and apply them to the vehicle’s acceleration within applyBehaviors():

applyBehaviors(vehicles) {

let separate = this.separate(vehicles);

let seek = this.seek(createVector(mouseX, mouseY));

this.applyForce(separate);

this.applyForce(seek);

Apply the forces here since seek() and separate() no longer do so.

}

Here’s how this new approach changes the seek() method:

seek(target) {

let desired = p5.Vector.sub(target, this.position);

desired.setMag(this.maxspeed);

let steer = p5.Vector.sub(desired, this.velocity);

steer.limit(this.maxforce);

this.applyForce(steer);

return steer;

Instead of applying the force, return the vector.

}

This change is subtle but incredibly important: it allows the strength of these forces to be weighted all in one place.

Example 5.10: Combining Steering Behaviors (Seek and Separate)

applyBehaviors(vehicles) {

let separate = this.separate(vehicles);

let seek = this.seek(createVector(mouseX, mouseY));

separate.mult(1.5);

seek.mult(0.5);

These values can be whatever you want them to be! They can be variables that are customized for each vehicle, or they can change over time.

this.applyForce(separate);

this.applyForce(seek);

}

In this code, I use mult() to adjust the forces. By multiplying each force vector by a factor, its magnitude is scaled accordingly. These factors (in this case, 1.5 for separate and 0.5 for seek) represent the weight assigned to each force. However, the weights don’t have to be constants. Think about how they might vary dynamically based on conditions within the environment or properties of the vehicle. For example, what if the seek weight increases when the vehicle detects food nearby (imagine the vehicle as a creature with a hunger property) or the separate weight becomes larger if the vehicle enters a crowded area? This flexibility in adjusting the weights allows for more sophisticated and nuanced behaviors to emerge.

Exercise 5.14

Modify Example 5.10 so that the behavior weights change over time. For example, what if the weights were calculated according to a sine wave or Perlin noise? Or what if some vehicles are more concerned with seeking and others are more concerned with separating? Can you introduce other steering behaviors as well?

Flocking

Flocking is a group animal behavior found in many living creatures, such as birds, fish, and insects. In 1986, Reynolds created a computer simulation of flocking behavior and documented the algorithm in his paper “Flocks, Herds, and Schools: A Distributed Behavioral Model.” Re-creating this simulation in p5.js will bring together all the concepts in this chapter:

- I will use the steering force formula (steer = desired – velocity) to implement the rules of flocking.

- These steering forces will be group behaviors and will require each vehicle to perceive all the other vehicles.

- I will combine and weight multiple forces.

- The result will be a complex system—intelligent group behavior will emerge from the simple rules of flocking without the presence of a centralized system or leader.

The good news is, I’ve already demonstrated items 1 through 3 in this chapter, so this section can just be about putting it all together and seeing the result.

Before I begin, I should mention that I’m going to change the name of the Vehicle class (yet again). Reynolds uses the term boid (a made-up word that refers to a birdlike object) to describe the elements of a flocking system. I’ll do the same.

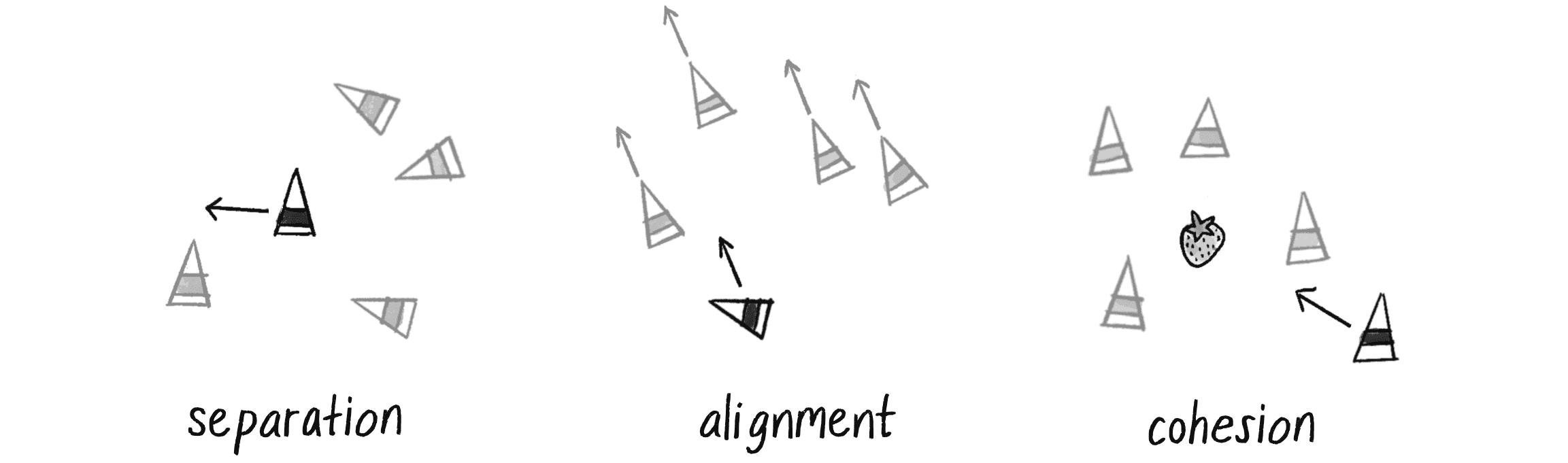

Three rules govern flocking:

- Separation (aka avoidance): Steer to avoid colliding with your neighbors.

- Alignment (aka copy): Steer in the same direction as your neighbors.

- Cohesion (aka center): Steer toward the center of your neighbors (stay with the group).

Figure 5.34 illustrates these rules.

Just as with Example 5.10, in which I combined separation and seeking, I want the Boid objects to have a single method that manages all three behaviors. I’ll call it flock():